Enterprise use of AutoML is less automated than you think

This blog post was co-written by Britta Fiore-Gartland and Anamaria Crisan, and is associated with the paper “Fits and Starts: Enterprise Use of AutoML and the Role of Humans in the Loop.” For more information, read the paper or watch the video presentation from the CHI’21 conference!

Automated Machine Learning (AutoML) is transforming data science by making it more accessible to a broader spectrum of business users. Recent innovations have expanded the scope and applicability of AutoML tooling to data preparation, model monitoring, and even communication. In a labor market tight on data scientists, it’s not surprising that more organizations are interested in AutoML technology. But how are organizations actually using AutoML technology? And what are the implications for the role of humans in relation to new AutoML systems?

To answer these questions, we interviewed people at organizations of different sizes and across different sectors about their use of AutoML. What we found challenged the prevalent assumption that AutoML primarily functions to automate routine data work in organizations. Instead, we found a broader range of use cases that involve collaborative work throughout the process. We found that, in practice, AutoML is (mostly) a misnomer.

Based on our findings, we highlight multiple AutoML use cases and the important collaborative relationships between humans and AutoML systems that support successful use.

Use Cases for AutoML in Enterprise

Not all data science work is rocket science. Some of it is routine and procedural and could benefit from the consistency that automation affords. With some caveats about task complexity, the automation of routine work is a common use case for AutoML technology. This automation serves an important role, as one of our participants observed:

“They [data scientists] could always do it better, but are they there? Do they have time? The answer is almost always, no. You can either do good or you can do nothing. Better is there, but you’re not getting better. They [data scientists] are busy, working on the big problems.”

At the outset of our study, we expected this to be the primary use case, but instead we found other common use cases that required different levels of human interaction.

We observed many people using AutoML to help them ideate solutions for potential customers. In this use case, the analysts were less worried about the final accuracy of the AutoML model and more focused on what it could help them imagine and communicate quickly. One participant summarized it:

“I talk to clients daily. If I could get ML done just real quick, as a prototype into what we could build (in depth), that would be super helpful. […] Can you get me 50% of the way to answering some question quickly? That would benefit me.”

Finally, others saw AutoML as an opportunity to expand data science techniques across the organization, often by putting them into the hands of experts who understand the data and business needs:

“[We] need our workforce to be more data savvy across the board. An engineer needs to be able to play with data as much as the MBA does […] [and] giving them better tools will help with ramp up.”

While these quotes paint a rosy picture of how AutoML can provide value across different use cases, we found that in practice, AutoML use also created new challenges. First, our study participants emphasized how hard it can be to uncover errors and fix mistakes. One participant observed that when AutoML breaks down, “you’re only as good as what you debug.” These breakdowns often required considerable human labor to overcome or repair. One frequent example was the need to get better data for model training, which was not always easy or possible. A second challenge was most often identified by trained data scientists, who worried that AutoML technology made it easier for others to push incorrect or biased models into production. They were concerned that AutoML made it possible “to slap together models and try to make decisions from it without understanding how things work.”

These formidable challenges created considerable organizational friction and, in many cases, introduced mistrust of the data work. Furthermore, the lack of good tools for oversight and observability of AutoML processes exacerbated this friction and mistrust.

Humans add unique lenses to AutoML

In digging further into these use cases, it became clear that AutoML in practice was actually a complex interaction between human mental models and the AutoML system. As one participant observed: “there’s human interaction along the whole life cycle […] interpreting that human interaction is what we’re trying to get machine learning to do.”

So what do humans add to machine learning models? People bring their own lenses to data and data work. These lenses can add meaning or context to what the AutoML system is doing. Importantly, they are not uniform across an organization. Trained data scientists, business analysts, and executives each bring their own perspectives on the data, the analysis, and its interpretation.

Across the use cases, we found that the lenses and mental models people bring to the data science process are not fixed but evolve purposefully throughout the analysis process. People actively used these lenses to refine, refute, and replace steps in automated data science work.

Perhaps even more important was that AutoML in practice consistently had more than one person in the process. Data scientists and business analysts need to interact not only with AutoML systems but also with other people in their organizations. While AutoML systems may provide a partial solution, they do not yet support much of the collaborative work necessary for negotiating the different lenses stakeholders bring to the process. One participant commented on this tension:

“I think they [executives] want you to use those [automated insights] to look at a graph and say, ‘Oh wow, this is life changing. Let’s go make this change in our business.’ We didn’t use it like that. We used it to make sure that the results we were getting back made common sense.”

At its worst, this tension across stakeholder perspectives makes it difficult for the results of data work, automated or not, to effect any real change. One participant summarized this as “the last mile problem”:

“The action of implementation of this in the market sometimes doesn’t always pull through. So it’s like we did all this work, you [the executive] said it was good, but now you have to take it to the last mile, actually get to marketing, creative, and content, and get it out to market.”

The right tools for humans and AutoML systems to work together

Even with the potential for AutoML to broaden the reach of data science processes within the organization, AutoML systems are not currently set up to overcome the last mile problem on their own. However, by acknowledging that success depends on humans and AutoML systems working together in interleaved processes, we can build better tooling that supports both human-human and human-machine collaboration in data work.

Our participants offered insights into what those tools need to look like.

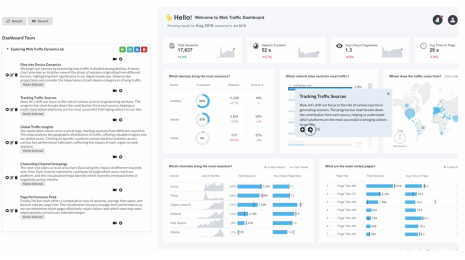

First, these tools need to integrate with people’s other analytic processes, including data preparation, monitoring, and communication, areas to which AutoML technology has only recently expanded.

Second, these tools need to integrate human input thoughtfully. Human intervention should be added at select stages of the AutoML pipeline in specific ways. Most importantly, it should not erode the speed gains of automation, for example, by requiring a lengthy process to visualize data. When human-in-the-loop tools are seen as unnecessarily interrupting AutoML processes, they are often abandoned.

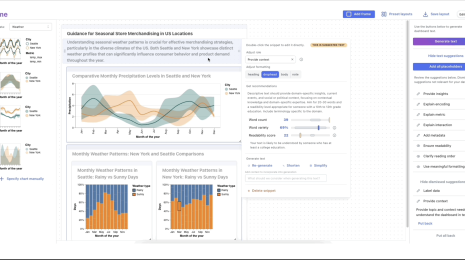

Third, visualization plays an important role in tooling for human collaboration with AutoML. One participant emphasized:

“the job is to deliver results of a model in some form […] Data scientists aren’t going to deploy [a visualization], but they may use it for static reporting or just for their information.”

While people acknowledged the value of visualization as a communication tool, they also expressed frustration with the challenges of making effective visualizations. One participant stated:

“There is a gap once your analysis is done on presenting the results. Nobody wants to spend more hours in another tool to build charts for explanation.”

Another observed that making “nice visualizations feels like a hack that the average user can’t build themselves.” Our participants shared a common challenge: building visualizations that integrate effectively not just with their larger data science tooling ecosystem, but also with their wider business processes.

The final, and arguably most important, requirement for automated data science work is governance. Pushing incorrect or biased models into production is a real concern raised by many participants. AutoML risks making it even easier to push inaccurate or, worse, biased models into production and negatively impact people at a larger scale. While participants were aware of these issues, they struggled to know how to anticipate and address them in meaningful and scalable ways.

Where do we go from here?

While there is great enthusiasm for using AutoML in enterprise settings, there are still formidable challenges and gaps that often stymie successful use. Our research suggests these gaps exist, in part, because AutoML is a misnomer. While the technology automates aspects of the process, a significant amount of human labor is still required to get a model to work. Taking a broader view of AutoML, beyond the limited scope of the technology itself, we see that a lot of human labor still remains to overcome the “last mile problem” and effect any organizational change or business impact. Moreover, we observed many ways that people and AutoML systems work together, even in fully automated work.

This work emphasizes the importance of thinking through the different levels of automation across data science work. Sometimes it makes sense for AutoML to take the lead, other times humans need to be in control, and there are also many opportunities where a hybrid approach works best. Understanding the right levels of automation is a critical component of designing and developing tools that support efficient, relevant, observable, and safe AutoML.

Once again, you’ll find more details and perspectives in our paper and CHI 2021 presentation. You can also see more posts from Britta Fiore-Gartland and Anamaria Crisan on Medium.