How Can I Trust Tableau Agent?

Are you ready to leverage Tableau Agent (formerly Einstein Copilot for Tableau) to scale out your organization's analytical capabilities? This powerful tool can help you explore your data, generate insights, and build visualizations faster than ever before. But before you dive in, it's important to understand how Tableau Agent upholds Tableau and Salesforce’s #1 value: Trust. Let’s explore how the Einstein Trust Layer protects your data, maximizes accuracy of results, and allows for auditing. I’ll also try to cover the most common questions and concerns our customers have brought to our attention.

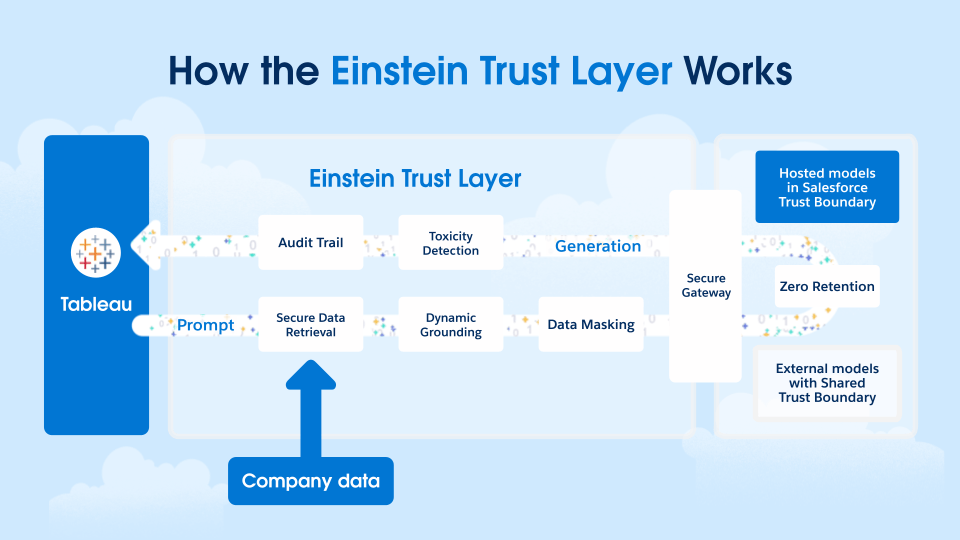

What is the Einstein Trust Layer?

The Einstein Trust Layer is a secure AI architecture built into the Salesforce platform. It is a set of agreements, security technology, and data and privacy controls used to keep your data safe while you explore generative AI solutions. Tableau Agent and other Tableau AI features are built on top of the Einstein Trust Layer and inherit all of its security, governance, and trust capabilities.

Who has access to my data?

The most common question our customers ask about Tableau Agent is “Who gets access to my organization’s data, and what are they doing with it?” To answer this question, let’s start with what the Einstein Trust Layer promises for every feature that uses it.

Contractual agreements with all of our third-party LLM providers (OpenAI and Azure OpenAI) include a zero data retention policy. This means the Einstein Trust Layer guarantees that none of the data sent to an LLM is saved by the LLM provider. Once the LLM has processed the prompt and returned the response, it forgets both. This also means that none of your organization's data benefits anyone or anything outside of your organization; it will not be used to train any models, inside or outside the Trust boundaries of Tableau and Salesforce.

The prompts your users generate and the responses you receive from Tableau Agent are your property. While they are forgotten by the LLM, each Tableau Agent customer is also provisioned with their own Data Cloud instance where prompts and responses are securely stored. This data is available through the Trust Layer’s Audit Trail for auditing by your infosec teams.

Within Tableau Cloud, and before any data hits the Einstein Trust Layer, Tableau Agent respects all permissions, row-level security (aka user filtering), and data policies for Virtual Connections. That means if a user does not have access to certain data on your Tableau Cloud site, they will not have access to it when they use Tableau Agent. In other words, only the people within your organization who are meant to have access to certain data have access to it, whether or not Tableau Agent is involved.

What data gets sent outside of my Tableau Cloud Site?

To answer this we’ll refer to the architecture diagram above and dive into how Tableau Agent works. Tableau Agent only remembers the context of a datasource within a Web Authoring session. First, Tableau Agent scans the Tableau data sources that the workbook is connected to, and creates a summary context of the datasource consisting of:

- Field names and descriptions

- Field data type (String, numerical, datetime, etc.)

- For non-numerical fields, unique values in that field up to 1000 unique values (note: non-numerical fields with a cardinality > 1000 are ignored)
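To make the shape of this summary concrete, here is a minimal Python sketch. It is not Tableau's implementation: the `FieldSummary` class, the `infer_type` and `summarize_field` helpers, and the type-inference rules are all hypothetical. It also assumes that "ignored" means no unique values are collected for a high-cardinality field; whether such a field is dropped from the summary entirely is not stated here.

```python
from dataclasses import dataclass, field
from datetime import date, datetime
from numbers import Number

MAX_UNIQUE_VALUES = 1000  # cardinality cap described in the list above


@dataclass
class FieldSummary:
    """Hypothetical container for one field's summary context."""
    name: str
    description: str
    data_type: str
    unique_values: list = field(default_factory=list)


def infer_type(values):
    """Very rough type inference, for illustration only."""
    non_null = [v for v in values if v is not None]
    if non_null and all(
        isinstance(v, Number) and not isinstance(v, bool) for v in non_null
    ):
        return "numerical"
    if non_null and all(isinstance(v, (date, datetime)) for v in non_null):
        return "datetime"
    return "string"


def summarize_field(name, description, values):
    """Build the summary for one field, applying the 1000-value cap."""
    data_type = infer_type(values)
    summary = FieldSummary(name, description, data_type)
    if data_type != "numerical":
        unique = sorted({str(v) for v in values if v is not None})
        # Assumption: fields above the cap contribute no unique values.
        if len(unique) <= MAX_UNIQUE_VALUES:
            summary.unique_values = unique
    return summary
```

For example, `summarize_field("product_line", "", ["sporting goods", "apparel", "apparel"])` yields a string field with two unique values, while a numerical field such as `Forecast_Sales` carries no values at all.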

This scan-and-summarize task is done entirely within the confines of your Tableau site. The summarized results are sent to our third-party LLM provider to vectorize field names and unique values in non-numeric fields. Vectorization of fields and unique values is important because it enables Tableau Agent to understand colloquial terms, abbreviations, and synonyms in user prompts. Imagine you have a dataset that includes fields called [Forecast_Sales] and [product_line], where one of the values of [product_line] is “sporting goods”. With vectorization, Tableau Agent is able to translate a user query like “How much athletic products are we projecting to sell in May?” to a visualization that shows [Forecast_Sales] for [product_line] = “sporting goods” in the month of May.

Because of our zero data retention policies in place with our third-party LLM providers, the summary context data is forgotten immediately after the vectorization happens.
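As a rough illustration of why vectorization helps with synonyms, the sketch below matches a query phrase to the closest known field value by cosine similarity. Everything in it is made up for the example: the three-dimensional vectors are hand-written stand-ins for real embeddings, and `TOY_VECTORS`, `cosine`, and `closest_value` are hypothetical names, not part of Tableau Agent.

```python
import math

# Hand-made 3-d vectors standing in for real embeddings (illustrative only).
TOY_VECTORS = {
    "sporting goods": (0.9, 0.1, 0.0),
    "home decor": (0.0, 0.9, 0.1),
    "electronics": (0.1, 0.0, 0.9),
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def closest_value(query_vector, candidates):
    """Return the candidate whose vector is most similar to the query."""
    return max(candidates, key=lambda name: cosine(query_vector, candidates[name]))


# Pretend this is the vector for the phrase "athletic products".
athletic_products = (0.8, 0.2, 0.1)
match = closest_value(athletic_products, TOY_VECTORS)
```

With these toy numbers, the phrase lands nearest to "sporting goods" even though the two share no words, which is the behavior the example above relies on.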

PII Data

This is now a good time to talk about personally identifiable information (PII). By default, Tableau Agent enables Einstein Trust Layer’s data masking feature. Data masking uses pattern matching and machine learning techniques to identify the PII data in the prompts, and replaces PII characters with placeholder text based on what it represents before sending them to the large language models. Using placeholder text rather than generic symbols helps the LLM maintain the context in the prompt for more relevant results. Einstein Trust Layer then unmasks the response when it is returned to the user so the response has the relevant data. Tableau Agent masks the following data:

- Name

- Phone Number

- US SSN

- Company Name

- IBAN Code

- US ITIN

- Passport Number

- US Driver's License

Note that although our pattern and ML-based data detection models have been effective during testing, no model can guarantee 100% accuracy. In addition, cross-region, multi-country use cases can affect the ability to detect PII data. With trust as our priority, we’re dedicated to the ongoing evaluation and refinement of our models.

PII data masking can be configured in Einstein Trust Layer settings in your Salesforce organization that was provisioned as part of Tableau Agent.
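The mask-then-unmask round trip can be sketched in a few lines of Python. This is only a toy under stated assumptions: it covers two pattern-matched types with simplified regular expressions, leaves out the machine learning detection entirely, and the placeholder format (`<US_SSN_1>` and so on) and the `mask` and `unmask` helpers are hypothetical rather than the Trust Layer's actual format or API.

```python
import re

# Simplified, illustrative patterns; real detection is broader than this.
PATTERNS = {
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
}


def mask(text):
    """Replace detected PII with typed placeholders; return text and mapping."""
    mapping = {}
    for label, pattern in PATTERNS.items():
        def replace(match, label=label):
            placeholder = f"<{label}_{len(mapping) + 1}>"
            mapping[placeholder] = match.group(0)
            return placeholder
        text = pattern.sub(replace, text)
    return text, mapping


def unmask(text, mapping):
    """Restore the original values in a response that contains placeholders."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


prompt = "Call 415-555-0100 about SSN 123-45-6789"
masked, mapping = mask(prompt)
restored = unmask(masked, mapping)
```

Because each placeholder names the kind of value it hides, the text that leaves the masking step still reads sensibly, and the mapping kept on the trusted side is enough to restore the original values in the response.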

Where does my data go?

- The region in which your Tableau site resides. This is where the data in your workbooks and data sources resides.

- The region in which your Salesforce org resides. This region influences which region your LLM requests are routed to. If the LLM used is available in your region, Geo-Aware LLM Request Routing will route your LLM requests to your region. However, we consistently evaluate state-of-the-art models available on the Einstein Generative AI Platform to find the one that offers the best performance and accuracy for our customers. As such, we cannot guarantee that the latest models used by Tableau Agent are available in the same region as your Tableau site or Salesforce org. Please refer to Geo-Aware LLM Request Routing on the Einstein Generative AI Platform for more information.

Why should I trust in the results Tableau Agent returns?

Trust does not only involve your data being secure. Trust also includes being confident that Tableau Agent will return accurate and safe results. While we have put techniques and controls in place to maximize accuracy and minimize harm, we cannot guarantee 100% accuracy, as is the case with all results returned from generative AI.

Safe, unharmful results

Tableau Agent uses Einstein Trust Layer’s Toxicity Confidence Scoring to detect harmful LLM inputs and responses. Under the hood, the Trust Layer uses a hybrid solution combining a rule-based profanity filter and an AI model developed by Salesforce Research. The AI model is a transformer model (based on Flan-T5-base) trained on 2.3M prompts from 7 datasets that are approved by Salesforce’s legal team. This model provides scores on 7 different categories of harmful prompts and responses, including toxicity (e.g. rude, disrespectful comments), violence, and profanity. Using benchmarks iteratively developed by the Einstein team, Tableau Agent filters harmful content and surfaces a warning to the user. Harmful content is stored along with its confidence scores, and is available for your teams to audit through Audit Trail.
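The hybrid approach (rules first, then model scores) can be pictured with the Python sketch below. It is a simplified stand-in, not the Trust Layer's code: `check_toxicity`, the blocklist, the `0.5` threshold, and `toy_scorer` (which takes the place of the real transformer model) are all hypothetical.

```python
import re


def check_toxicity(text, score_fn, blocklist, threshold=0.5):
    """Return (is_harmful, flagged_scores) using rules first, then a model."""
    tokens = set(re.findall(r"[a-z']+", text.lower()))
    # Rule-based pass: any blocklisted word flags the text immediately.
    if tokens & set(blocklist):
        return True, {"profanity_rule": 1.0}
    # Model pass: keep only categories scoring at or above the threshold.
    scores = score_fn(text)
    flagged = {cat: score for cat, score in scores.items() if score >= threshold}
    return bool(flagged), flagged


def toy_scorer(text):
    """Stand-in for a per-category confidence model (illustrative only)."""
    return {"toxicity": 0.9 if "idiot" in text.lower() else 0.1, "violence": 0.0}
```

Returning the flagged scores alongside the decision mirrors the idea that harmful content is stored with its confidence scores for later auditing.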

Accurate results

We benchmark Tableau Agent's accuracy each release on a test dataset of more than 1,500 (query, viz) and (query, Tableau Calculation) pairs from our internal use of Tableau (yes, we heavily use our own product!). We’ve established a set of metrics that quantify the relevance and accuracy of LLM-generated vizzes relative to human-authored ones, and that approximate the different ways a user can ask Tableau Agent an analytical question, including:

- Canonical accuracy—measures how accurately the generated notional spec matches the expected notional spec.

- Semantic match accuracy—measures how accurately the generated notional spec matches the expected notional spec accounting for wildcard matches. Wildcard matches need to account for the multitude of ways a viz can be rendered (e.g., relative date filter vs. date range filter, multiple date fields to render trend line charts, etc.).

- Recall—the percentage of expected fields correctly returned by search retrievers and selected by LLM.
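As a hedged illustration of how these three metrics differ, the Python sketch below treats a notional spec as a flat dictionary and uses `"*"` as the wildcard. Both choices are assumptions made for the example, as are the function names; the actual spec format and matching rules are not described here.

```python
WILDCARD = "*"  # assumed marker for "any value is acceptable"


def canonical_match(generated, expected):
    """Exact match between the generated and expected specs."""
    return generated == expected


def semantic_match(generated, expected):
    """Match that lets wildcard entries in the expected spec accept any value."""
    if generated.keys() != expected.keys():
        return False
    return all(
        expected[key] == WILDCARD or generated[key] == expected[key]
        for key in expected
    )


def field_recall(returned_fields, expected_fields):
    """Share of expected fields that were actually returned."""
    expected = set(expected_fields)
    if not expected:
        return 1.0
    return len(expected & set(returned_fields)) / len(expected)


def accuracy(pairs, matcher):
    """Fraction of (generated, expected) pairs accepted by the matcher."""
    if not pairs:
        return 0.0
    return sum(matcher(gen, exp) for gen, exp in pairs) / len(pairs)


expected = {"mark": "line", "measure": "Forecast_Sales", "date_filter": WILDCARD}
generated = {"mark": "line", "measure": "Forecast_Sales",
             "date_filter": "relative:last_6_months"}
```

Here the generated spec fails the canonical check but passes the semantic one, because the expected spec accepts any kind of date filter.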

To get to this level of accuracy we use dynamic grounding (also referred to as retrieval augmented generation or “RAG”) by instructing the LLM to reference the following information included in our prompts:

- The metadata that describes your datasource (see "What data gets sent outside of my Tableau Cloud Site?" for what this metadata is). This gives the LLM the context of your datasource and prevents it from hallucinating fields that do not exist in your dataset.

- The chat history for the session. This enables Tableau Agent to understand demonstrative pronouns like this, that, these, and those, which refer back to words and concepts from earlier in the chat session.

- The current viz on your worksheet. This gives the LLM the context of your current viz so that it can make modifications to it accurately.

In addition to dynamic grounding, we iteratively adjust how Tableau Agent handles ambiguity. For example, take a simple query like “Show me the last 6 months of sales by product.” If there are multiple datetime fields, it is not clear which one “the last 6 months” refers to. Currently, Tableau Agent will ask the user a follow-up question with the likeliest options for the datetime field before rendering the visualization.
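The decision described above (render directly when there is one candidate, ask when there are several) can be sketched as follows. The `plan_date_filter` function, its return shape, and the question wording are hypothetical, and the sketch does not attempt to rank the options by likelihood.

```python
def plan_date_filter(datetime_fields):
    """Decide whether to render right away or ask which date field to use."""
    if not datetime_fields:
        return {"action": "no_date_field"}
    if len(datetime_fields) == 1:
        # Only one candidate, so there is nothing to disambiguate.
        return {"action": "render", "date_field": datetime_fields[0]}
    return {
        "action": "ask_user",
        "question": "Which date field should 'the last 6 months' use?",
        "options": list(datetime_fields),
    }
```

For instance, a datasource with both `Order Date` and `Ship Date` would produce an `ask_user` plan listing both fields, while one with only `Order Date` would render immediately.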

What is the future of Trust in Tableau Agent?

Trust is not a one-and-done. Our teams working on Tableau Agent and the Einstein Trust Layer continuously iterate on their features based on customer feedback so we can keep your trust in us. For example, we are currently developing a BYO LLM solution for customers where third-party LLM providers are a no-go. We’re also improving Tableau Agent’s ability to disambiguate by learning which measures and dimensions are commonly used together. We’re frequently trialing new and improved prompts, testing them against our benchmark dataset, and releasing the ones that are both performant and accurate.

We at Tableau and at Salesforce understand that trusting generative AI with your company secrets and your customer data while balancing the benefits of generative AI is still a challenge today. I hope that this blog post answered some of your most pressing questions and concerns about Tableau Agent. If you have further questions about Tableau Agent or the Einstein Trust Layer, please feel free to leave a comment and I’ll try to respond. Alternatively, you can reach out to your Sales contact at Tableau, and they will be able to identify the expert in the area of your concern.