How Abbott Labs is helping scale COVID-19 testing data—and pushing the conversation on public health further

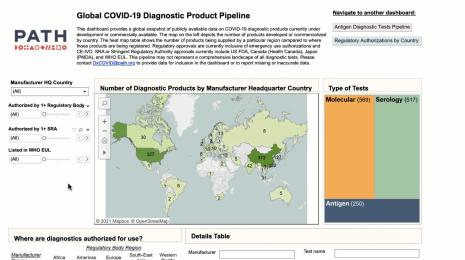

As COVID-19 has progressed, calls for more testing and more data have only grown more pronounced. As a data company, Tableau backs up these calls—we know that the more data we have on a situation, the better we can understand it and take action against it. To dive deeper into what the testing data landscape actually looks like in the U.S.—how complex it is, and what it can tell us about the state of the virus—we turned to the people right in the middle of it.

Jeremy Schubert is the VP of the diagnostics division at Abbott Laboratories, one of the world’s largest medical device and healthcare companies. Abbott Labs has created and distributed multiple tests for COVID-19 and is running diagnostics on them with its laboratory instruments. The company is manufacturing and distributing millions of tests per month, which gives it unique insight into exactly what it takes to scale up testing and the data that comes from it, as well as what we should be thinking about when we consider the potential for data to inform our understanding of health and healthcare in the U.S.

The value—and the limits—of testing data

Even though Abbott Labs is directly contributing to the critically important backbone of COVID-19 testing in the U.S., Schubert is adamant that focusing on the data derived from testing alone will not provide a complete picture of the pandemic. “COVID-19 is more than a healthcare problem—it’s a public health problem that impacts and is impacted by healthcare and healthcare systems,” he says. “Effective pandemic management begins with a clear and broad understanding of the state of the pathogen, and we need more clinical testing to really understand that.”

Widespread testing is absolutely vital for understanding where the virus is and how it’s spreading. But laboratory testing in a vacuum isn’t enough to truly understand the impacts of COVID-19, Schubert says. “Health is more than physical, and there are a number of domains that surround health.” Everything from mental well-being to social determinants of health like housing status or access to healthy foods could be impacted by the pandemic. While diagnostic testing needs to scale up so the data on the virus can more accurately reflect the reality of the situation, the conversation on data and COVID-19 shouldn’t end there.

Schubert gives this example to illustrate what other data can show about the impacts of COVID-19: In the interviews Abbott Labs has done with senior executives of health systems across the U.S., they’ve consistently heard that the number of emergency room visits for heart attacks and strokes is down. “Now, people didn’t stop having heart attacks and strokes; they just stopped showing up to the ER,” Schubert says. “And I don’t think we fully understand the massive impact that this virus has had, not just directly for those who have had COVID-19, but for those who have had to defer care and face an inability to live a full life.” Collection efforts around these data and many more can supplement the understanding we derive from testing and diagnostic data.

Delving into the process of collecting testing data

Even though it’s clear to all of us data people that we need more diagnostic testing data, there’s so much we don’t know about the systems that produce that data. What does it take for a COVID-19 test result to make it into a data set? What are the systems responsible for running the test and producing the result?

For the two key COVID-19 tests (the viral test, and the antibody test to determine if someone had the virus at some point), there’s an end-to-end process at work, Schubert says. “It’s not just about the processing of the sample in the instruments. There’s so much work done pre- and post-processing that creates the most dynamic testing, and shows why our healthcare workers and lab professionals are so important,” he adds.

The process, from a person deciding to access a test to that result showing up in a local report, involves multiple steps, each with its own KPIs and logistics that data is essential to manage:

- Patient recruitment and entry. “You may think, OK, this is a nominal element,” Schubert says, “but from a population health perspective, considering the way people access testing is important, particularly if you think about the social determinants of health and what it means if somebody doesn’t have access to transportation.”

- Ordering a test. “There’s a lot more we have to do here than draw the blood or take a sample on a swab—there’s a degree of documentation that has to take place in a computer system to record what’s been done,” Schubert says.

- Labeling the sample. Precision is key to make sure the sample is not lost in the shuffle.

- Transporting the sample to the lab. “If you think about the thousands of samples that might roll into a lab at any given time, there’s all this data entry that’s required to ensure the samples were received, and that additional data is added to support it,” Schubert says.

- Sorting the samples. “There’s not just one universal box that everything runs through—there are different departments that may be on different floors in different buildings and run on different instruments. The samples might have to be sorted multiple different times to be in a position where they can be processed.”

- Preparing the samples. Lab workers may have to centrifuge a sample or treat it in a particular way before it’s sent to the instruments to be processed.

- Investing in ongoing instrument readiness. “Lab instruments aren’t always just sitting there, ready to go like a laptop computer you leave plugged in all the time,” Schubert says. “There’s maintenance, there’s quality control that may have to be run, to ensure the instruments are ready to process samples.”

- Conducting laboratory analysis. Once an instrument processes samples, the laboratory professionals have to validate the results and make sure that the results they’re seeing coming off the instrument make sense. “There may be some additional steps of calling a physician if there’s a question or for more information, or even of re-running the sample,” Schubert says.

- Releasing the result. This could be done automatically or manually to ensure that the patients and physicians have access to the result of the test.

- Managing the sample. Once the test is done, the lab has to decide if they store the sample for a period of time, and when it’s safe to dispose of it.

This is a meticulous process to ensure that a person’s experience with symptoms or potential exposure to a virus is translated as accurately as possible into data that can inform the broader understanding.

Understanding what it takes to scale and speed up testing

Even though the process outlined above leans heavily on “excellent automation and process flow,” Schubert says, “there’s a huge amount of human involvement with the process.” So calls to scale up testing and data availability in the U.S. are not just about increasing the volume of tests produced and the number of machines running them. “If there’s a surge in volume, even a good process can be outworked by it,” he says. “There are people who have to integrate this surge into the day-to-day workload they already have.”

There’s no question that when it comes to testing and data collection for this pandemic, speed is critical. Especially for virus testing for people currently experiencing COVID-19 symptoms, there’s a desire to get a result as quickly as possible. But the potential for bottlenecks along the multi-step processing system is high, Schubert says, particularly because the machines that process swab samples operate at a fairly low capacity for the amount of space they take up in a lab. Manufacturers across the U.S., with the support of the FDA, are working diligently to scale up capacity for virus testing and meet the demand for speed, but it’s not a completely seamless process. Schubert does note that antibody testing, which relies on blood samples, does not face the same capacity constraints because the testing infrastructure is more widely scaled already.

The next frontier of COVID-19 data

Beyond simply scaling the testing system, Schubert says there is also a much broader effort underway to take testing data beyond clinical results and use it to manage the public health ramifications. “As we move forward, we’re going to move away from a simple system where we’re treating patients and reporting those numbers, to something much bigger,” he says. That means combining testing data with those on social, environmental, and economic factors to understand the impacts on individual well-being beyond the virus itself.

Using expanded testing data as a jumping-off point for opening up a conversation about the data we have on all aspects of health in the U.S., Schubert says, “can build a ton of confidence in the population about their well-being, and can take away a lot of the confusion, the worry, the concern, which at the end of the day is weighing heavily on many of our communities. Doing that can get us to a quicker return to life. Maybe it’ll be a different world, but a world that from a public health perspective will be better.”