Using the Hyper API to Union Hyper Files

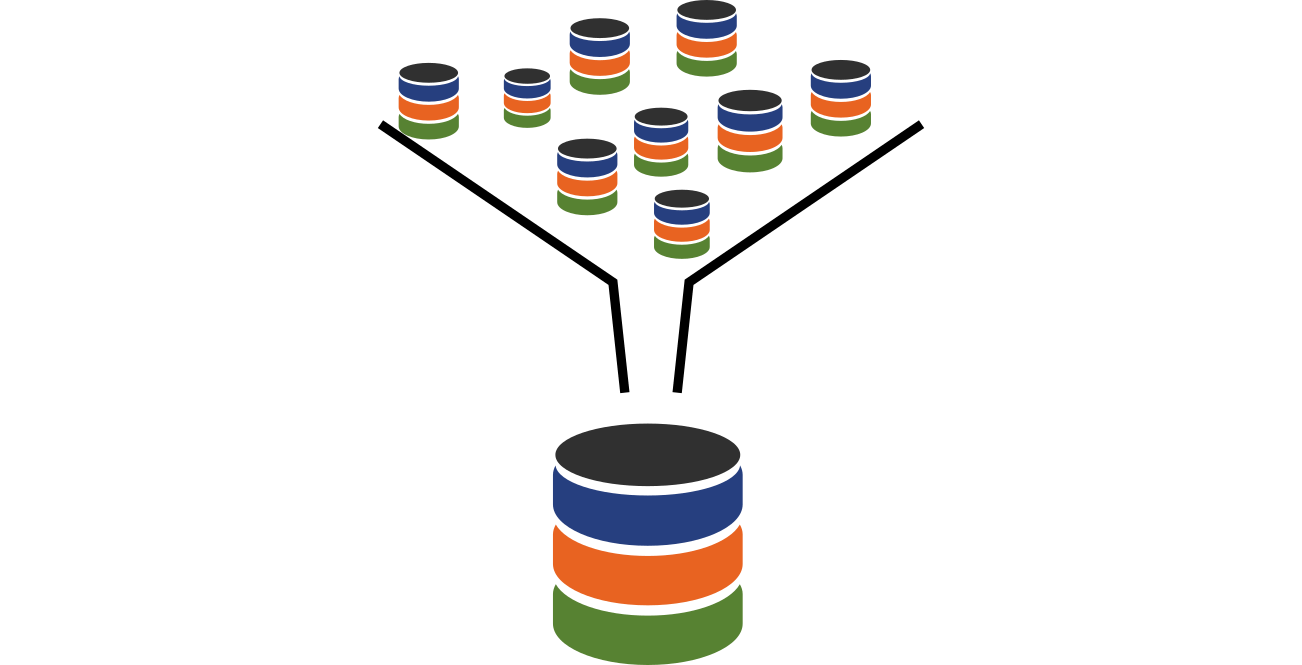

The Hyper API allows you to not only write data into Hyper files but also to read data from Hyper files. Thereby it enables a plethora of new use cases. The one we will focus on in this blog post: unioning Hyper files directly using the API. We will turn multiple smaller Hyper files into one large Hyper file.

It’s easy to throw together a quick Python script that works but is comparatively slow. Your first instinct to improve performance might be: Fine, let’s tune the hell out of it, let’s implement it in C++ or even better in assembly! But while I personally love C++, that would be overkill here. No, in this blog post, we are going to show you how to get optimal performance for unioning Hyper files — and all of that while staying in Python.

If you are just looking for some code to copy & paste, you can find it in this Gist. If you just want to union some Hyper files, and don’t care about writing your own script or understanding the internals of that script, I can recommend Timothy Vermeiren’s script which comes with a pre-bundled Windows executable, so you won’t even have to install Python or anything.

The scenario

We are going to use the well-known “World Indicators” data set which ships as part of the “World Indicators” demo workbook with Tableau.

However, somehow, our data ended up split into multiple files: instead of one “WorldIndicators.hyper”, we have 12 of the them:

WorldIndicators_2000.hyperWorldIndicators_2001.hyperWorldIndicators_2002.hyper…WorldIndicators_2012.hyperand we would like to combine all of those together into just one Hyper file.

In this blog post, we will be using Python. If you want to follow along, please install the Hyper API for Python. Next, download the input files and unzip them.

The inefficient solution: Processing the rows in Python

We first take a look at a correct but slow solution. This is not the recommended way to union your data. It still shows some valuable tricks (such as copying over a table definition from one file into another file).

First, let’s find all the Hyper files we want to combine. We can use a simple glob pattern for that.

from glob import glob

input_files = glob("WorldIndicators_*.hyper")Furthermore we need to know from which table within the Hyper file we want to read the data from. Let’s simply declare a global variable for this for now:

from tableauhyperapi import HyperProcess, Connection, Telemetry, TableDefinition, TableName, SchemaName, Inserter, CreateMode

table_name = TableName('Extract','Extract')Now, let's go over all those files and read all the data out of them:

# Read the data out of all files

# DON'T DO THIS! This is an counterexample which shows how not to write this code.

# Further down, we will show you how to do this more efficiently

unioned_data = []

# Start a new Hyper instance

with HyperProcess(Telemetry.SEND_USAGE_DATA_TO_TABLEAU) as hyper:

# Go over all the input files

for file in input_files:

# Connect to the Hyper file and read all data from it

with Connection(hyper.endpoint, database=file) as connection:

unioned_data += connection.execute_list_query(f"SELECT * FROM {table_name}")Note how we are using SELECT * instead of listing the column names individually. Thereby, we can use this script against arbitrary Hyper files, no matter what the columns are actually called.

The query is composed using string operations, namely the f-string feature of Python. Seeing how we build this query out of string operations, one might become scared of SQL injections. Luckily for us, table_name is a QualifiedName and Hyper API made sure that its to_string method takes care of proper quoting. Still, in case you don't know what SQL injections are, I urge you to read up on it. SQL injections are among the most common security vulnerabilities.

Now that we have all our data in the unioned_data list, we want to store it into a new file. Before we can insert the data into a new file, we first need a table within that file, though. This table should have the same columns and column types as our input tables. For simplicity, let's just assume that all our input files have the same columns (otherwise we would fail, anyways) and just duplicate the table definition from the first input file into our output file:

output_file="WorldIndicatorsMerged.hyper" with HyperProcess(Telemetry.SEND_USAGE_DATA_TO_TABLEAU) as hyper: # Get the table's schema from the first input file. # We will just assume that all files have the exact same schema. with Connection(hyper.endpoint, database=input_files[0]) as connection: table_def = connection.catalog.get_table_definition(table_name) # Reset the table_def.table_name table_def.table_name = table_name # Create the same table in the target database with Connection(hyper.endpoint, database=output_file, create_mode=CreateMode.CREATE_AND_REPLACE) as connection: # Create the output table connection.catalog.create_schema(SchemaName(table_name.schema_name)) connection.catalog.create_table(table_def)

For the most part, this is straightforward: We get the table definition from the first input table and then pass it to create_table to create the same table in the output file.

However, there are two stumbling blocks on our way:

- We need to reset the table_def.table_name since the table definition returned by catalog.get_table_definition also contains the database name of the database we executed it against. E.g., it reads "WorldIndicators2017.Extract.Extract". We want to recreate the table in a different database, though, and hence we reset it to "Extract.Extract".

- We first need to create the schema "Extract" before we can create a table within it.

With that out of the way, all that remains to be done is to insert the data into our new table:

with HyperProcess(Telemetry.SEND_USAGE_DATA_TO_TABLEAU, 'unionfiles_inefficient') as hyper:

with Connection(hyper.endpoint, database=output_file) as connection:

# Insert the data

with Inserter(connection, table_def) as inserter:

inserter.add_rows(unioned_data)

inserter.execute()Note how we can simply pass the list of unioned tuples into add_rows. No need to add the rows one by one.

And voila, we have our unioned output file 🎉🎉🎉

However, this approach has one large obvious inefficiency: We restarted Hyper multiple times and reconnected more often than necessary. Let's quickly fix that by restructuring our code. While at it, let's also add a few timing outputs to get a feeling for how fast our code is:

https://gist.github.com/vogelsgesang/1c1e72975e89565a547c45fc75a940c4#f…

which will create the output

0.21025586128234863: Reading WorldIndicators_2007.hyper 0.27318382263183594: Reading WorldIndicators_2011.hyper 0.33381152153015137: Reading WorldIndicators_2002.hyper 0.38861918449401855: Reading WorldIndicators_2004.hyper 0.44709086418151855: Reading WorldIndicators_2009.hyper 0.5095717906951904: Reading WorldIndicators_2008.hyper 0.5679950714111328: Reading WorldIndicators_2010.hyper 0.626805305480957: Reading WorldIndicators_2001.hyper 0.687401294708252: Reading WorldIndicators_2012.hyper 0.7462217807769775: Reading WorldIndicators_2005.hyper 0.8062338829040527: Reading WorldIndicators_2003.hyper 0.8644604682922363: Reading WorldIndicators_2000.hyper 0.9244029521942139: Reading WorldIndicators_2006.hyper 0.9844169616699219: Inserting data...1.2255094051361084: Done :)

All in all this took 1.134 seconds. While for those rather small Hyper files, the runtime is acceptable, it is still slower than it could be.

Furthermore, this approach does not scale to larger datasets. After all, we are keeping all our data in memory within the unioned_data array. As soon as your data is larger than a few gigabytes, Python will have serious trouble handling this amount of data.

Let's see if we can do better.

The recommended solution: Let Hyper do the work

The key insight to a more efficient solution is:

Hyper allows you to work on multiple database files within the same connection.

Using this capability, we can instruct Hyper to directly move data between various Hyper files without ever moving a single row to Python. And Hyper is much faster at moving data around than Python.

So, how do we do we actually tell Hyper to access multiple Hyper files? We do so using the attach_database method like so:

from tableauhyperapi import HyperProcess, Connection, Telemetry

hyper = HyperProcess(Telemetry.SEND_USAGE_DATA_TO_TABLEAU)

# Don't pass in any database to connect against.

# We want to start with an "empty" connection.connection = Connection(hyper.endpoint)

# Let's "attach" two databases to our connection

connection.catalog.attach_database("WorldIndicators_2000.hyper", alias="input1")

connection.catalog.attach_database("WorldIndicators_2001.hyper", alias="input2")Hyper loaded both databases. We can now access both databases through SQL by specifying our tables using the syntax <database alias>.<schema name>.<table name> where database alias is the alias we provided when calling attach_database. Let's quickly peek into our two databases:

print(connection.execute_list_query( 'SELECT "Year" FROM "input1"."Extract"."Extract" LIMIT 1'))

# Output: [[Date(2000, 12, 1)]]

print(connection.execute_list_query('SELECT "Year" FROM "input2"."Extract"."Extract" LIMIT 1'))

# Output: [[Date(2001, 12, 1)]]That looks about right 😃

Next step: Let's use our SQL knowledge and let Hyper directly Union both tables:

unioned_data = connection.execute_list_query(''' SELECT * FROM "input1"."Extract"."Extract" UNION ALL SELECT * FROM "input2"."Extract"."Extract" ''')

print(f"unioned data has {len(unioned_data)} tuples")

# Output: unioned data has 416 tuplesAlmost there...

Now we would like to store the UNIONed data into a new table without moving it through Python. Turns out, this is also possible, thanks to CREATE TABLE AS:

connection.execute_list_query('''

CREATE TEMPORARY TABLE unioned_data AS

SELECT * FROM "input1"."Extract"."Extract"

UNION ALL

SELECT * FROM "input2"."Extract"."Extract"

''')

unioned_data_len = connection.execute_scalar_query(

'SELECT COUNT(*) FROM unioned_data')

print(f"unioned data has {unioned_data_len} tuples")

# Output: unioned data has 416 tuplesSo with those building blocks, we are almost done. All we are missing is persisting the newly created table into a separate Hyper database.

But before moving on, let's be polite and clean up after us. Since we aren't using Python's with this time, we should close the connection and shutdown the hyper server:

connection.close() hyper.close()

Perfect, now let's jump right into the final version of our little script:

https://gist.github.com/vogelsgesang/e83260fd3e1429aefed99ad30a27f196#f…

which outputs

0.4374821186065674: Attached all input databases... "Extract"."Extract" 0.4706258773803711: Prepared output database 0.7011048793792725: Done :)

Yeah, we were twice as fast this time! 🎉🎉🎉 Given that each of our inputs only had 213 rows, that's pretty impressive. Of course, for larger inputs the difference only gets larger. I am looking forward to hear from your local experiments on hopefully larger files!

The code by itself should be pretty much self-explanatory:

- Spawn a HyperProcess and connect to it

- Attach all our input databases to our connection

- Prepare an output file and also attach it to our session

- Format a SQL query which stores the UNIONed results into our new database

- Done :)

The formatting step might be a bit hard to read for non-Python devs. For everyone who isn't fluent with Python formatting, this is the generated query:

https://gist.github.com/vogelsgesang/1e5b47e5c3c91f087eb6a8ad6cdc15b8#f…

Pretty straight-forward - feel free to reimplement in your favorite language.

Although, at least from a performance perspective there isn't really a reason to not just stick with Python here. With this solution, Python is only formatting the queries and sending them to Hyper. The actual work happens in Hyper. Python code never touches the individual rows which we are copying between the files. And at least for string processing, it really doesn't make a difference if you use Python, C++ or hand-optimized Assembly.

Conclusion

In this blog post, we looked at two implementations to UNION a set of Hyper files. We first looked into a straight-forward solution which consisted of reading all data from all input files, unioning it in a Python list and then inserting all rows into the output file.

This approach has the large drawback, that all data is moved through Python. And it turns out that Python is not exactly the most efficient language for per-row operations.

However, Hyper actually allows you to send SQL commands referencing tables from multiple different Hyper files.

With that capability, we can express our complete UNION in a single query. This query takes care of both unioning the data and inserting it into the output table. That way, we make sure that Python never touches the individual tuples. All tuples stay within Hyper and as it turns out, Hyper is pretty efficient at processing data rows - which is little surprise since this is exactly what Hyper was built for.

Bonus round: Splitting Hyper files

You might wonder how I ended up with the “WorldIndicators.hyper” split into one Hyper file per year. Well, it’s probably of little surprise: I used Hyper API for that. And again, I reused the same trick and asked Hyper to split that file for me. Here is the script: