Ethical AI: A business priority needing attention and partnership

Editor's note: This article originally appeared in Forbes, by Kathy Baxter, Principal Architect, Ethical AI Practice, Salesforce

Whether the goal is to become more data-driven, drive exponential growth, or stand out from competitors, artificial intelligence (AI) development is clearly a high priority for enterprises, with worldwide AI spending expected to hit $110 billion in 2024. Yet of the executives focused on AI, 80% are struggling to establish processes to ensure responsible AI use. Unfortunately, companies are developing and implementing AI technologies far faster than they are building the urgency and commitment needed to do so responsibly.

Developing ethical AI is not a nice-to-have, but the responsibility of entire organizations to guarantee data accuracy. Without ethical AI, we break customer trust, perpetuate bias and create data errors—all of which generate risk to the brand and business performance, but most importantly cause harm. And we can’t ignore that consumers—and our own employees—expect us to be responsible with the technology solutions we create and use to make a positive impact on the world.

Whether you lead a company that creates technologies relying on AI applications or a company that chooses to adopt them, you must understand the complexities, risks, and implications of ethical AI use while democratizing data for all.

Responsible AI use: Table stakes for business

Developing and deploying AI solutions is becoming increasingly complex as biased algorithms and data continue to spread harm and receive broader public attention and scrutiny, from media reports on questionable company activity to global surveys examining citizen trust and expectations of AI use. Of course, we also can’t ignore the proposed policies detailed below. People are learning about inherently biased facial recognition technology that misidentifies people as criminal suspects based on their race, historically biased loan decisions that reject applicants based on ZIP codes, and more.

Creating ethical AI is the responsible thing to do for society—and it also happens to be good for business. With consumer trust waning, we have to act with speed and urgency, before we experience long-term, irreparable damage to brand reputations, increased penalties for regulated industries, or even loss of life.

Creating responsible AI solutions supports an organization’s bottom line, and while some development practices are voluntary, existing and forthcoming legislation makes responsible AI a legal requirement. As this field evolves, so do the governance controls, policies, and penalties related to it. Governing bodies in the European Union, Canada, and the U.S. have drafted or enacted various regulations for ethical AI, hoping to address bias in algorithms (as with the proposed Algorithmic Accountability Act), protect consumer data (as with the General Data Protection Regulation), and hold businesses more accountable. The U.S. Federal Trade Commission isn’t shy about taking action against businesses using racially biased algorithms, which it considers “unfair or deceptive practices” prohibited by the FTC Act. The FTC indicated that if businesses fail to hold themselves accountable for all actions, both human and AI, they should “be ready for the FTC to do it for [them].”

The list of reasons to develop AI ethically is long—for some, it’s to positively impact society, and for others, it’s trying to avoid penalties. But whatever your motivation or end goal, there are key steps to follow and measures to keep in mind.

How to establish ethical AI practices

At Salesforce and Tableau, we strive to develop and implement AI responsibly to reduce bias and mitigate risks to the company and the customers we serve. Customers have trusted the Salesforce and Tableau platforms to help them securely see and understand their data and experience success from anywhere—a responsibility we don’t take lightly. Trust is our number-one company value, and to maintain trust, we’re committed to building ethical products and empowering customers with practical resources and information to apply ethical standards in their own use and development of AI.

Getting started: the Ethical AI Practice Maturity Model

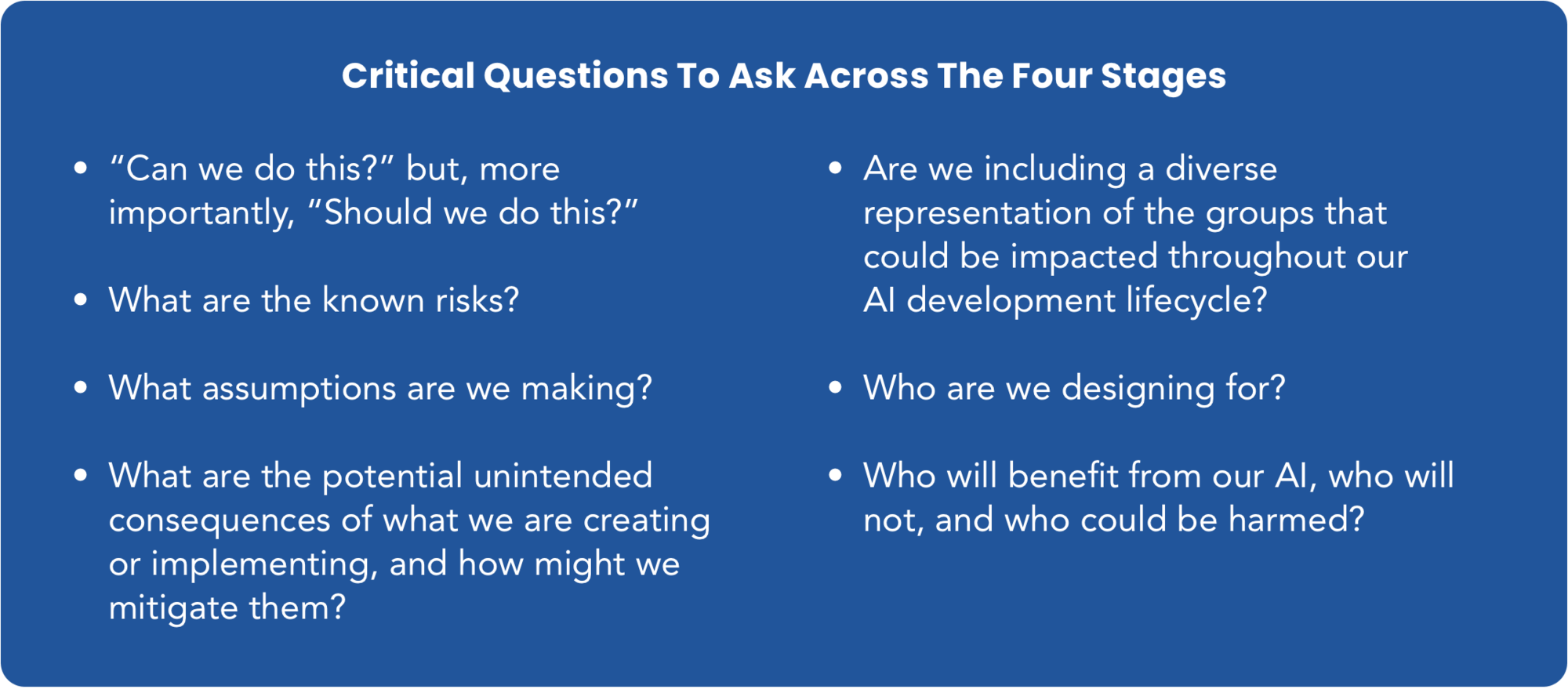

In the course of building innovative platforms, supporting customers, and having relevant industry discussions about ethical AI, we’ve identified a maturity model—one that’s been effective in helping companies build an ethical AI practice. The Ethical AI Practice Maturity Model walks step-by-step through four critical stages to start, mature, and expand ethical AI practices so your organization is better equipped to safeguard customers and shift from intention to action. Across the four stages, asking critical questions will aid your efforts to help reduce bias, mitigate brand and legal risk, and avoid harm to the customers you’re creating for or the people who are tangentially touched by your work.

Stage 1: Ad hoc

This stage is about raising awareness of ethical AI and educating your workforce. This is the time to get teams thinking about whether certain AI features should be developed at all, what their unintended consequences might be, and more. You may also see individual advocates developing small-scale strategies and working to earn buy-in.

Stage 2: Organized and repeatable

Whether leaders need to hire externally or promote from within, you need dedicated individuals and teams to establish and maintain ethical AI practices and execute on a strategic vision. This is the time to formalize roles, commit resources, and collaboratively develop guiding AI principles.

Stage 3: Managed and sustainable

With the dedicated people or teams in place to help execute, this stage includes the work of building formal processes into your development lifecycle. Tie meaningful rewards and consequences to launching products without accruing ethical debt, so that your AI practice is viable long term and encourages responsible thinking and behaviors.

Stage 4: Optimized and innovative

With the vision and dedicated support, your organization can now bake ethics into all levels across the business—from product development to engineering and privacy to UX—working together to build in ethics-by-design for all products and solutions launched in-market.

Key considerations along the journey

Two critical elements are worth calling out, which come into play in Stages 2 and 3, respectively: having trusted AI principles and implementing the Responsible AI Development Lifecycle.

- Make sure there’s alignment on the trusted AI principles with key stakeholders, solicit support and commitment to them, and articulate or publish them for easy reference. Learn more about the Salesforce AI principles of responsibility, accountability, transparency, empowerment, and inclusivity, which may inspire the creation of your own set that guides the work of your ethics team, company, and employees.

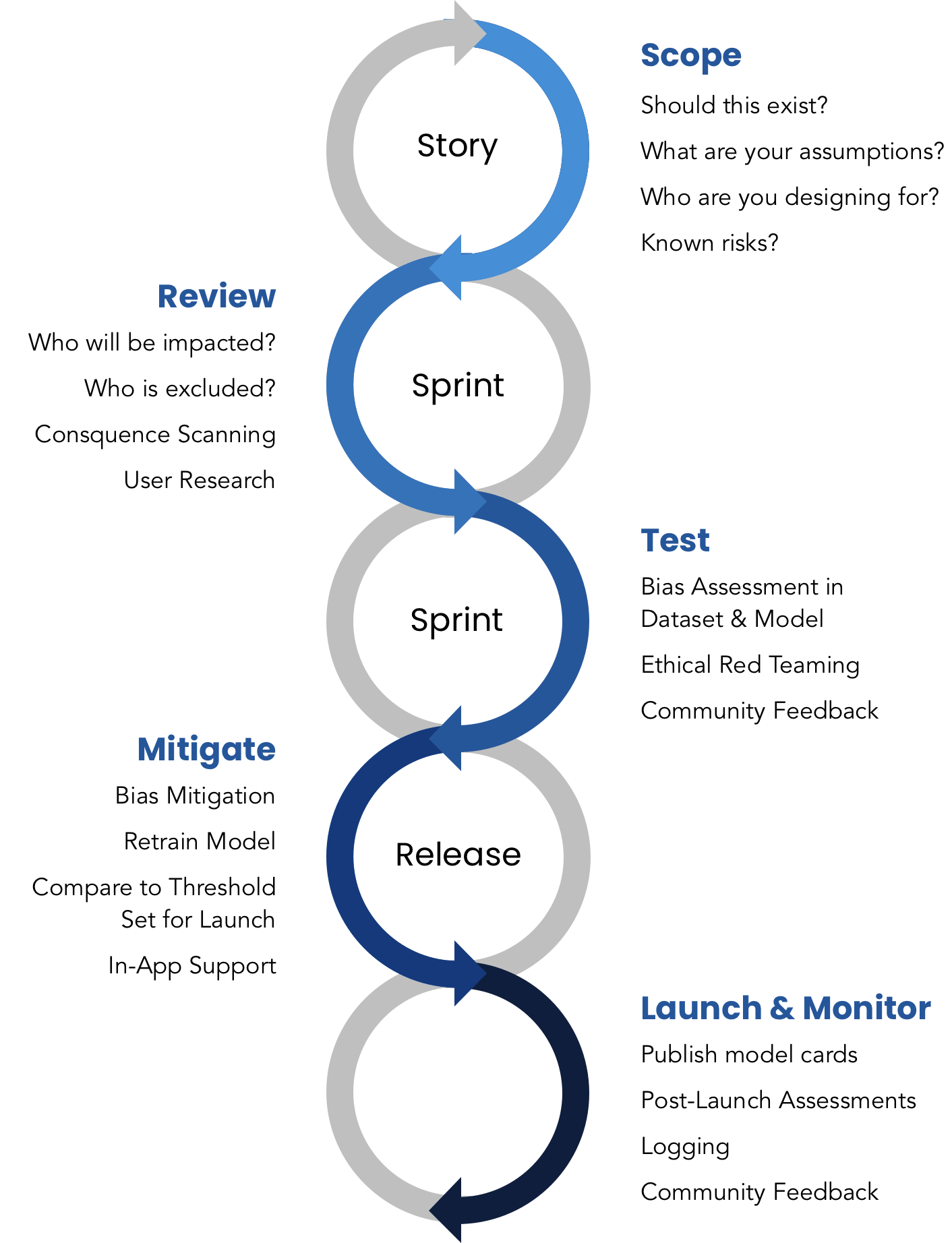

- The Responsible AI Development Lifecycle follows the Agile Development Lifecycle stages of Story, Sprint, and Release, and details the critical elements at each step as you move from scoping to testing and on to a final launch and monitoring stage.

Where to next?

Getting AI right takes time and resources and isn’t easy, but it’s more important than ever. When we ensure that AI is safe and inclusive, everyone wins: it creates positive outcomes for industries, businesses, customers, and society. When we get it wrong, the repercussions can be severe and can cause real harm.

Together, Tableau and Salesforce focus on delivering solutions to customers, partners, and employees that support effective, ethical AI use, working with industry colleagues in developing valuable, end-to-end resources. Check out this how-to guide, relevant data analytics innovations that support smarter decision making or expert guidance on the ethics of AI as you embark on the next steps to develop AI responsibly.