Massive Serverless Parallelization

Who would have guessed that being able to quickly crunch data would be valuable to Tableau’s Prototyping team? Whether we need to comb through gigs of log files or test millions of permutations of columns in a data table, parallelization allows us to deliver prototypes that are responsive and compelling.

In Building Serverless Prototypes, we introduced our team, and explained why we prefer serverless architectures:

At the Tableau Prototyping team, our mission is to drive innovation through exploring and prototyping new ideas. Often these are most easily proven out through simple web apps that allow users to explore our ideas and allow us to verify our projects’ usefulness. We want to build fast and stay flexible — spending large amounts of time to set up a bespoke infrastructure for each of our new projects takes away from the time we have to work on core algorithms or project innovations.

In this post, we’ll explore our recipe for implementing parallelized computations using AWS Lambda, S3, and DynamoDB. We’ll also discuss some approaches we tried along the way that did not work.

To develop and test our parallelization recipe, we came up with a “Hello, World!” for parallel computing — a simple task that naturally lends itself to parallelization. Our task was to determine most frequent of words in a large text file for each length of word (1–20). We were able to accomplish this in 7 seconds.

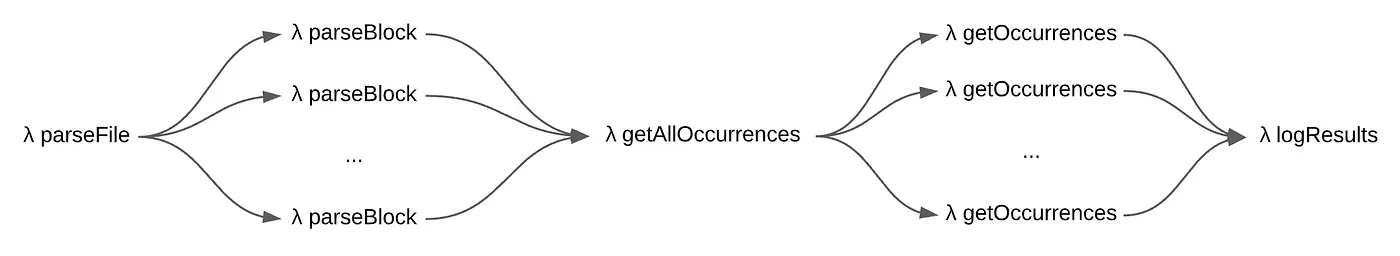

We’ll dive into implementation details soon, but let’s start with the high level algorithm. We take a 1 gigabyte file from the Wikipedia dump and segment it into many smaller blocks. Each block is processed in parallel using a standard MapReduce pattern using AWS Lambda. Each Lambda reads the assigned block and populates 20 in-memory dictionaries (one for word length). These dictionaries are our word frequencies for this block, and are persisted in JSON files on AWS S3. We then start another MapReduce stage where each word length is processed in parallel, reading the word frequencies from S3 for all blocks for the specified word length and summing the frequency counts. The top 10 frequent words for the word length are saved on DynamoDB. Finally, the top 10 frequent words for all word lengths are read from DynamoDB and written to AWS CloudWatch.

Let’s take a closer look at the Lambdas that facilitate the above algorithm.

λ parseFile

This is the entry point for our entire workload. It partitions the large text file into 5-megabyte blocks and invokes λ parseBlock in parallel for each of these blocks. We limited the maximum concurrency to 250. It waits for the completion of all of the parallel λ parseBlock invocations before finally invoking λ getAllOccurrences to continue our orchestration. Libraries like p-map make it easy to implement this MapReduce pattern:

[table 1 goes here]

λ parseBlock

This lambda reads the text file from s3 for the specific block range and keeps track of the frequencies of each word. The frequencies are bucketed by word length. This lambda has to deal with words that are cut off at the edges of the block: A split word at the start of the block is ignored, and a split word at the end of the block is read by over-fetching some bytes beyond our block’s specified range.

After reading the block, we need to save the word frequencies so that Lambdas further down in our orchestration can consume them. DynamoDB has a 400KB limit on item sizes, and these word frequencies were more blob-like in any case, so we store them in S3. In order to retrieve the object in the future, we use a prefix that is a hash of the length of the word and the block id.

Recall that we limited this phase to a concurrency of 250. This is because S3 can fail with 503 Slow Down errors if given a sudden burst of requests. If we needed to improve our performance further, we could overcome this by gradually scaling up the concurrency or by implementing retries with exponential backoff, however we opted for the simpler concurrency limit since 7 seconds was sufficient for our needs.

We encapsulated this s3-backed blob storage with the following:

[table 2 goes here]

λ getAllOccurrences

This simply kicks off the second MapReduce phase using λ getOccurrences — one for each word length (1–20). It reuses the mapReduce function described earlier.

λ getOccurrences

Recall that each λ parseBlock stored the word frequencies for each block via the BlobStore described earlier. In this lambda, we read all of those frequencies for all of the blobs of a specific word length and sum them. We also keep track of the 10 most frequent words. The final result is stored in DynamoDB.

λ logResults

The final lambda in our orchestration reads the results from DynamoDB and logs them using CloudWatch.

Things that didn’t quite work…

At this stage, we have a recipe that we are satisfied with; it is fast, easy to implement, and costs nothing when our prototypes aren’t in use. Along the way, we experimented with a couple other ideas. Here’s what didn’t quite work.

Over-parallelization

One of our first attempts stored the word frequencies directly in DynamoDB, using the words as keys. As we read words in the file, we’d update the count for that word in DynamoDB. This approach failed for two reasons. First, atomic counters built on DynamoDB should only be used when minor overcounting or undercounting can be tolerated. Secondly, we quickly exceeded DynamoDB’s allowed throughput. The table throughput is a soft limit and we were able to ask AWS to increase it for our account. However the partition limit is a hard limit, so we had to find another way.

AWS Step Functions

This service provides an orchestration framework for lambdas. However it is quite slow — taking over 2 minutes to execute the lambdas above (compare this to the 7 seconds it takes when we handle the orchestration ourselves). The performance is likely due to the low maximum concurrency limit for the Map task and other AWS Step Function limits. If you’re determined to use Step Functions, folks have found less-than-elegant workarounds for these issues, however we could not justify proceeding down this path given how easy it is to do the orchestration ourselves.

Conclusion

We learned that we can indeed support highly parallelized workflows on our Serverless architecture. Identifying the right level at which to parallelize is key; too high and you’re not going to see perf improvements, but too low and the overhead of splitting and merging will exceed the gains you got from parallelization. We found that assembling our own lambdas worked well, and other architectures we tried did not work well.