Tableau LangChain: Build highly flexible AI applications that extend your Tableau Server or Cloud Environment

Tableau LangChain is an open-source project designed to help Tableau customers build AI agents and applications that run within their security boundaries, leverage the model and model providers of their choice, and connect to the Tableau Server of their choice. Using Tableau LangChain you can leverage the data you trust in Tableau within your AI applications and integrate AI capabilities back into the Tableau product.

Key benefits:

- Configurable: Conforms to your unique security and IT constraints.

- Flexible: Bring an AI model of your choice, hosted locally or from a third-party provider.

- Open: Build on existing tools, collaborate with the #DataFam, and contribute improvements back to the community.

What is Tableau LangChain?

Tableau LangChain implements a collection of Python classes and methods designed to work with the LangGraph framework. These classes and methods wrap Tableau’s APIs with Python functions, which become tools that can be leveraged within LangChain and LangGraph.

You can think of it as a toolkit that helps AI agents, or an LLM, talk directly to your Tableau data. For example, the simple_datasource_qa tool is implemented using LangGraph syntax and makes it simple to create a LangGraph agent that can query your Tableau published data sources through the VizQL Data Service. Additional tools include search_datasource and get_pulse_insight.

You can learn more about the Tableau LangChain integration in Lead Developer and Contributor Joseph Fluckiger’s blog post, Unleashing Tableau’s Semantic Layer with AI Agents.

Tools are an essential component of an AI agent because they enable agents to accomplish tasks that go beyond the scope of a standard response from an LLM. For example, if you’ve tried ChatGPT’s Deep Research feature, you have seen how ChatGPT will use web search as a tool to get more information and then come up with a higher quality and more accurate answer to your question.

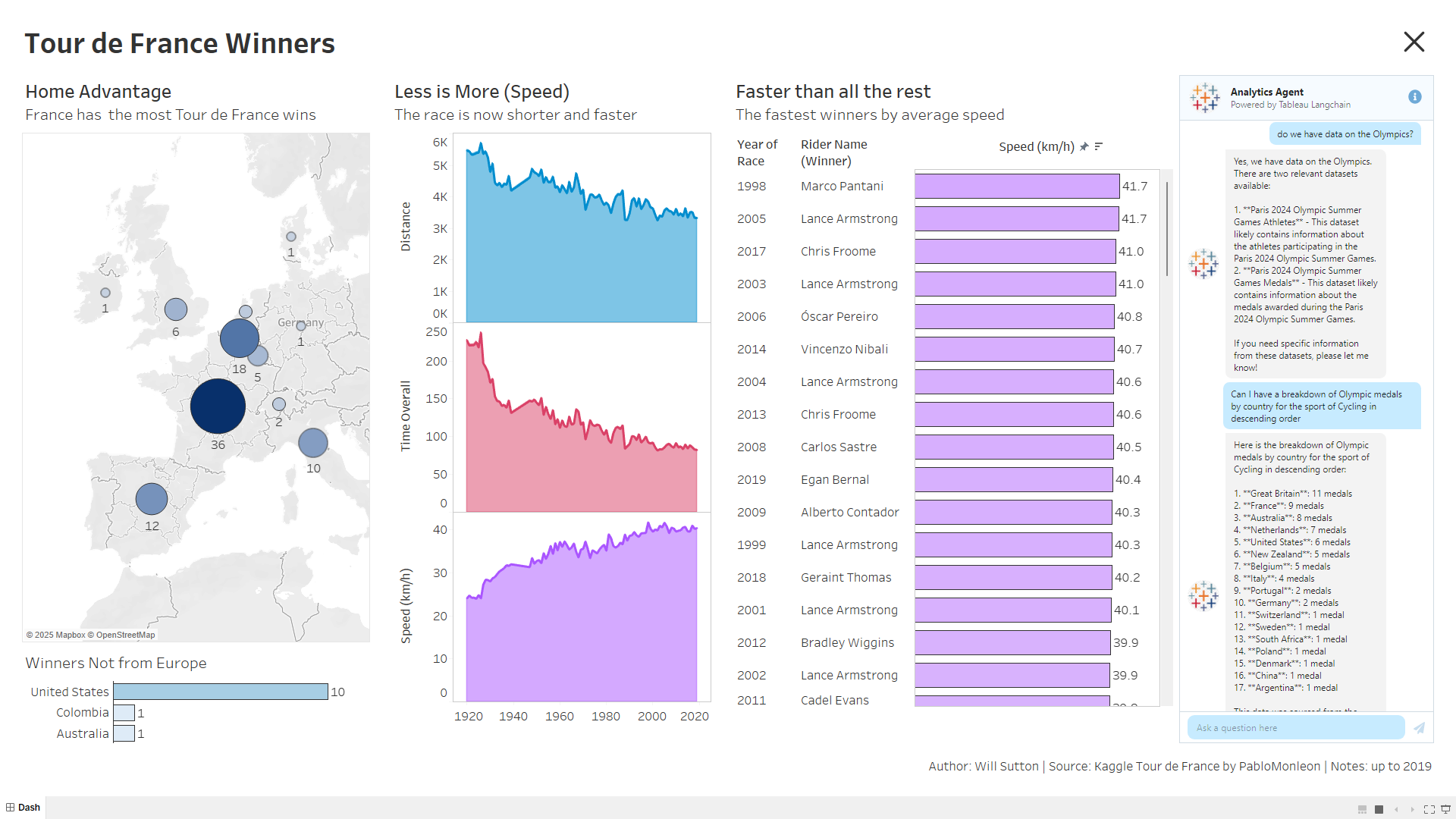

Similarly, a LangGraph agent with access to the search_datasource and simple_datasource_qa tools lets the LLM search for data sources on your Tableau Server and then query them. At Tableau Conference 2025, I demoed this as a way for a dashboard user to bring in new data. For the agent, the question "Do we have data on the Olympics?" invokes the tool that searches for data sources, and the follow-up question "Can I have a breakdown of medal counts by country?" invokes the query tool and returns the data through a dashboard extension.
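To make that two-step flow concrete, here is a minimal, framework-free sketch of tool routing. Everything in it is hypothetical: the two stub functions only stand in for the search and query tools, the keyword rule stands in for the LLM's tool choice, and the catalogue holds made-up sample rows. In a real agent, LangGraph and the model make this decision for you.

```python
from typing import Dict, List, Optional, Union

# Hypothetical in-memory catalogue standing in for published data sources.
# The rows are made-up sample values used only to exercise the stubs.
CATALOGUE: Dict[str, List[dict]] = {
    "Olympics Medals": [
        {"country": "Country A", "medals": 3},
        {"country": "Country B", "medals": 5},
        {"country": "Country A", "medals": 2},
    ],
}


def search_datasource(question: str) -> List[str]:
    """Stub search tool: names of data sources sharing a word with the question."""
    words = {w.strip("?.,").lower() for w in question.split()}
    return [
        name for name in CATALOGUE
        if words & {w.lower() for w in name.split()}
    ]


def query_datasource(name: str) -> Dict[str, int]:
    """Stub query tool: total medals per country for one data source."""
    totals: Dict[str, int] = {}
    for row in CATALOGUE[name]:
        totals[row["country"]] = totals.get(row["country"], 0) + row["medals"]
    return totals


def choose_tool(question: str) -> str:
    """Stand-in for the LLM's tool choice: a simple keyword rule."""
    return "query" if "breakdown" in question.lower() else "search"


def run_turn(question: str, current: Optional[str] = None) -> Union[List[str], Dict[str, int]]:
    """Route one user question to the matching stub tool."""
    if choose_tool(question) == "search":
        return search_datasource(question)
    if current is None:
        raise ValueError("A data source must be found before it can be queried.")
    return query_datasource(current)


if __name__ == "__main__":
    found = run_turn("Do we have data on the Olympics?")
    print(found)
    print(run_turn("Can I have a breakdown of medal counts by country?", found[0]))
```

The point of the sketch is the shape of the loop: the first question resolves to a data source, and the second question reuses that result as input to the query tool.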

Tableau LangChain is a community partnership: developers at Tableau work with community members to build and develop agentic tools and AI applications.

Examples highlighted at Tableau Conference 2025 include:

- Dashboard Extensions: Query underlying data dynamically to answer ad hoc questions on your dashboard's data.

- Vector Search: Enhanced search capabilities using semantic understanding, for example, searching for "healthcare" retrieves data on "NHS Prescriptions".

- Report Writer: An agent that will analyze a data source and write a report on the data to a local file.

The project is hosted publicly on GitHub and published to the PyPI registry as langchain-tableau, following the conventions for LangChain integrations.

How Tableau LangChain Works

By leveraging existing Tableau APIs, including the Metadata API, Pulse REST API, and VizQL Data Service, Tableau LangChain enables AI models to accurately interact with Tableau data.

- Metadata API: Identifies content on your Tableau Server or Cloud, providing essential context to AI models. This ensures queries are accurate by understanding available columns and data structures.

- Pulse REST API: Streamlines insight generation for AI models by directly accessing trend analysis, key drivers, and contributions to metric changes. This integration enables consistent, efficient, and accurate responses.

- VizQL Data Service (VDS): Offers programmatic, visualization-free access to Tableau data sources, enabling AI models to directly retrieve data by converting natural language queries into precise, programmatic requests.
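As a rough illustration of what a "precise, programmatic request" can look like, the sketch below builds a VizQL Data Service query body and an unsent HTTP request using only the standard library. The endpoint path, the JSON field names (datasourceLuid, fieldCaption, function), and the X-Tableau-Auth header reflect my understanding of the VDS request format and are assumptions to verify against the official API reference for your Tableau version. The server URL and helper names are hypothetical.

```python
import json
from typing import List
from urllib.request import Request

# Assumed endpoint path; confirm it against the VizQL Data Service docs.
VDS_QUERY_PATH = "/api/v1/vizql-data-service/query-datasource"


def build_vds_payload(datasource_luid: str, dimensions: List[str],
                      measure: str, function: str = "SUM") -> dict:
    """Build a query body: group by the dimensions and aggregate one measure."""
    fields = [{"fieldCaption": caption} for caption in dimensions]
    fields.append({"fieldCaption": measure, "function": function})
    return {
        "datasource": {"datasourceLuid": datasource_luid},
        "query": {"fields": fields},
    }


def build_vds_request(server_url: str, auth_token: str, payload: dict) -> Request:
    """Wrap the payload in a POST request object. Nothing is sent here."""
    return Request(
        url=server_url.rstrip("/") + VDS_QUERY_PATH,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "X-Tableau-Auth": auth_token,  # assumed auth header
        },
        method="POST",
    )


if __name__ == "__main__":
    body = build_vds_payload("your-datasource-luid", ["Country"], "Medals")
    print(json.dumps(body, indent=2))
```

In the project itself this translation is handled by the Tableau LangChain tools, with the LLM choosing the fields from the data source metadata; the sketch only shows the kind of structured request a natural language question is turned into.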

Combining these APIs lets you create a new kind of experience for your users, built on the data you trust from Tableau. In the example below, our lead developer on the project, Stephen Price, has built a website with an embedded chat module where users can put their questions to an AI and see responses drawn from the Metadata API, Pulse REST API, and VizQL Data Service. Try it for yourself on Embed Tableau.

Getting Started with Tableau LangChain

I highly recommend starting with the Hands-On Training notebooks from the Tableau Conference. Here you’ll find details on getting a development environment ready on your local machine.

To work through these notebooks, I recommend using Tableau Cloud, or Tableau Server 2025.1 or later, so that you have access to the VizQL Data Service API. This lets you use the Tableau Data Source Q&A tool, as demonstrated in notebook 3. The Tableau Developer Program gives you access to a Tableau Cloud sandbox environment, which is perfect for working through these notebooks.

Next, configure your .env file of environment variables. These hold the keys used to access content on your Tableau platform. Starting from the .env.template file, you need to update:

- OPENAI_API_KEY: an API key from OpenAI’s developer portal, used to call an LLM

- LANGCHAIN_API_KEY: an API key from LangSmith, used to track the AI agent’s responses

- Everything under Tableau Environment: this requires you to create a Connected App and find the LUID (identifier) for a data source on your Tableau Server or Cloud environment
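Before opening the notebooks, it can help to confirm that the .env file is actually filled in. The following is a small standard-library sketch, not part of the project: the helper names are hypothetical, and it only checks the two keys named above because the exact variable names under Tableau Environment are defined in .env.template. The notebooks may load the file differently, for example with a dotenv library.

```python
from pathlib import Path
from typing import Dict, Iterable, List

# Only the keys named in this post; extend with the names from .env.template.
REQUIRED_KEYS = ("OPENAI_API_KEY", "LANGCHAIN_API_KEY")


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=value lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def missing_keys(values: Dict[str, str],
                 required: Iterable[str] = REQUIRED_KEYS) -> List[str]:
    """Return the required keys that are absent or empty."""
    return [key for key in required if not values.get(key)]


def check_env_file(path: str = ".env") -> List[str]:
    """Read the file at `path` and report which required keys still need a value."""
    return missing_keys(parse_env(Path(path).read_text(encoding="utf-8")))


if __name__ == "__main__":
    sample = "# LLM settings\nOPENAI_API_KEY='sk-placeholder'\nLANGCHAIN_API_KEY=\n"
    print(missing_keys(parse_env(sample)))
```

An empty list from check_env_file means every key it knows about has a value; it does not validate that the keys themselves are accepted by the services.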

Finding a Data source LUID

- On your Tableau Server/Cloud, navigate to External Assets in the left-hand menu

- In the top-right of the screen click Query metadata (GraphiQL) to go to the GraphQL interface

- Enter the following GraphQL query, which searches for any data sources called “Superstore”. Press play in the top-left to run the query, then record the LUID in your .env file. Note that you can change the name in the filter to find any data source of interest.

    query Find_Datasources_LUID {
      tableauSites {
        name
        publishedDatasources(filter: { name: "Superstore" }) {
          name
          projectName
          description
          luid
          uri
          owner {
            username
            name
          }
          hasActiveWarning
          extractLastRefreshTime
          extractLastIncrementalUpdateTime
          extractLastUpdateTime
        }
      }
    }
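If you would rather look up the LUID from a script than from the GraphiQL page, the same kind of query can be prepared in Python. This is a hedged sketch using only the standard library: the /api/metadata/graphql path and the X-Tableau-Auth header are assumptions about the Metadata API to confirm in the official docs, the helper names are hypothetical, and the response in the usage example is a placeholder shaped like the query above, not real output.

```python
import json
from typing import List, Tuple
from urllib.request import Request

METADATA_PATH = "/api/metadata/graphql"  # assumed Metadata API endpoint path


def build_luid_query(name: str) -> str:
    """Return a trimmed version of the query above for one data source name."""
    # json.dumps produces a quoted, escaped string usable as a GraphQL literal.
    return (
        "query Find_Datasources_LUID { tableauSites { name "
        "publishedDatasources(filter: { name: %s }) "
        "{ name projectName luid } } }" % json.dumps(name)
    )


def build_metadata_request(server_url: str, auth_token: str, name: str) -> Request:
    """Wrap the query in a POST request object. Nothing is sent here."""
    body = json.dumps({"query": build_luid_query(name)}).encode("utf-8")
    return Request(
        url=server_url.rstrip("/") + METADATA_PATH,
        data=body,
        headers={"Content-Type": "application/json", "X-Tableau-Auth": auth_token},
        method="POST",
    )


def extract_luids(response: dict) -> List[Tuple[str, str, str]]:
    """Collect (site name, data source name, LUID) from a parsed response."""
    found = []
    for site in response.get("data", {}).get("tableauSites", []):
        for source in site.get("publishedDatasources", []):
            found.append((site["name"], source["name"], source["luid"]))
    return found


if __name__ == "__main__":
    # Placeholder response with the same shape as the query; not real data.
    placeholder = {"data": {"tableauSites": [{
        "name": "my-site",
        "publishedDatasources": [{
            "name": "Superstore",
            "projectName": "Samples",
            "luid": "00000000-0000-0000-0000-000000000000",
        }],
    }]}}
    print(extract_luids(placeholder))
```

To send the request, you would pass it to urllib.request.urlopen with a valid session token, then hand the parsed JSON to extract_luids.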

Now you’re all set.

- Run [Notebook 1] to verify your environment is ready

- [Notebook 2] provides an introduction to the open-source LangChain and LangGraph frameworks

- [Notebook 3] lets you run the Tableau Data Source Q&A tool against your chosen data source

- [Notebook 4] shows you how to create a report-writing agent using tools from the LangChain community

- [Notebook 5] helps you create a Slack integration using the Slack LangChain Tool

Join the Tableau LangChain Community

The Tableau LangChain project is a Tableau and community initiative. The project thrives on community collaboration and understanding the problems you face. To learn more, start building AI applications and get involved in the project:

- Contribute to the [GitHub repository]

- Join us on the data dev Slack channel #tableau-langchain

- Or watch out for updates at the AI + Tableau User Group

We’re excited to see what you create with Tableau LangChain. Join us today to expand the AI capabilities of Tableau Server and Tableau Cloud.