Software Defense Spending

First, a brief history. Like many tech companies, Tableau has invested heavily in E2E automation as a daily quality indicator, which left us with thousands of desktop tests written in Python 2.7. These tests still run on the daily promotion candidate build under several configurations but are not part of our checkin quality gates. Because these tests exercise the application through the UI or command system, their code is completely independent of the development code and is generally owned by a different set of engineers with their own skills and interests. The value of each test has ranged from essential to redundant. While I will touch on the larger conversation about where a team, organization, and company should focus its testing efforts, most of this article focuses on how my team eliminated most of these tests.

When Python 2.7 reached end of support, the company decided to port our tests to Java, forcing us to weigh the benefits of each test against the cost of reimplementing it. That decision certainly deserves its own post, so I’ll again defer. Essentially, for each test we had to decide whether to port it to Java, cover the scenario upstream in a module or unit test, or eliminate it.

We asked ourselves many questions, but they can mostly be distilled to the following:

- Does this test exercise a unique path in our product? In other words, is this test coverage provided elsewhere?

- From a cost/benefit standpoint, where is the best place for this coverage? Unit, module, and integration tests add unique value (run on each checkin, no developer bootstrap required) but often carry a much higher engineering cost. E2E tests have many benefits as well. For example, we’ve found them to be critical in validating refactoring efforts, even in modules with great unit test code coverage. Their drawbacks have included test reliability and longer validation times due to test setup, UI and command synchronization, and teardown.

- Can we live with a coverage gap? For example, if the test would only discover a small styling issue with a corner case data source, we would likely invest our time elsewhere.
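To make the three questions concrete, here is a minimal sketch of how they might combine into a per-test decision. The names (`Decision`, `triage`, and the three boolean inputs) are hypothetical and only illustrate the reasoning; the actual analysis was a judgment call made test by test, not an automated rule.

```python
from enum import Enum


class Decision(Enum):
    """Possible outcomes for a legacy E2E test (labels are illustrative)."""
    PORT = "port to Java as an E2E test"
    MOVE_UPSTREAM = "cover in a module or unit test"
    ELIMINATE = "eliminate"


def triage(covered_elsewhere, gap_acceptable, upstream_is_better_fit):
    """Map the three questions onto a decision.

    All three inputs are assumed to be answers a reviewer has already
    reached by inspecting the test and the code it exercises.
    """
    # Redundant coverage, or a gap we can live with: drop the test.
    if covered_elsewhere or gap_acceptable:
        return Decision.ELIMINATE
    # Coverage is needed, but a cheaper or faster home exists upstream.
    if upstream_is_better_fit:
        return Decision.MOVE_UPSTREAM
    # Otherwise the scenario stays an E2E test and gets ported.
    return Decision.PORT


print(triage(covered_elsewhere=False, gap_acceptable=False,
             upstream_is_better_fit=True).value)
```

The order of the checks reflects one reasonable priority (eliminate first, then move upstream, then port); a team with different needs could weigh them differently.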

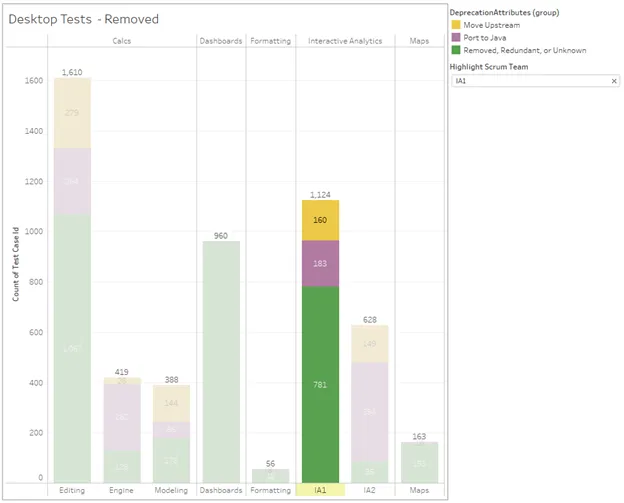

Here is a high-level summary of the analysis broken down by feature team (my team is IA1):

There is quite a bit of variance among the teams. This is due to inherent variability in judgement, certainly, but it’s also largely due to the varying needs of each team. For example, one team historically needed protection against changes from other teams, so keeping a disproportionate number of E2E tests made sense, particularly since those other teams were not interested in investing a large engineering effort to move the tests upstream. Another team dedicated more resources to the project, mobbing as a team for several months to move tests upstream to module and unit tests.

Focusing on my team, we went into this exercise treating our E2E test coverage the way I view the US defense budget: excessive, but much of it necessary. You don’t want to be the one slashing the budget just before a catastrophe happens. We could have easily decided to port all of the tests to ensure that we wouldn’t cut anything of value, but that would have deferred the cost to others to do work that we didn’t want to do ourselves. This wasn’t consistent with our ‘We Work As a Team’ value. Also, if you’ll indulge my defense budget analogy further, we didn’t want to rebuild a bunch of ported and landlocked aircraft carriers and jets, so cuts were desired.

Instead, we decided to treat this as an opportunity to familiarize ourselves with the legacy code, which we occasionally need to dive into for defect fixes. So, this investment had value outside of this E2E test deprecation exercise. It’s important to note that we had already shifted away from excessive E2E automation in more recent features, so it only stood to reason that we would do the same for legacy features. To illustrate, here is a chart of our features over time, sized by the number of E2E desktop tests we had:

Look at those big bubbles in the 2015–2016 time frame. What happened here versus starting in 2017? Certainly, each feature is unique, and there are exceptions. However, we generally weren’t implementing less complicated features over time. In fact, the Set Control was a multi-year project, and Set and Parameter Actions have a large footprint across multiple Tableau features. Essentially, the team decided to take a more precise approach to testing and make larger investments upfront in module and unit tests. For those legacy 2015–2016 features, we would identify variables and their domains like this:

- Variable 1 (e.g. data type): integer, float, Boolean, date, date time, string

- Variable 2 (e.g. legend type): size, color, shape

There would be test suites with all 18 combinations of the two variables. There is certainly merit to this on cursory evaluation. Sometimes you don’t know what you don’t know, particularly in this type of blackbox testing, so taking a shotgun approach can be pragmatic, particularly if you can pack these into a data driven test (DDT) that abates the upfront engineering cost. Part of writing test automation is defect discovery, and putting those scenarios down as future guardrails can be important. So, this isn’t to disparage the past but rather to recognize that while the intent was sound, a lot of these tests from 2015–2016 were likely redundant. We still needed proof, and it was just a matter of doing the work.
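As a rough illustration of where the 18 comes from, the sketch below enumerates every pairing of the two example variables the way a data driven test might. The `run_case` function is a hypothetical placeholder, not code from our suites; a real test would drive the application through the UI or command system.

```python
from itertools import product

# Domains taken from the two example variables in the text.
DATA_TYPES = ["integer", "float", "Boolean", "date", "date time", "string"]
LEGEND_TYPES = ["size", "color", "shape"]


def build_cases(data_types, legend_types):
    """Return one (data_type, legend_type) pair per combination."""
    return list(product(data_types, legend_types))


def run_case(data_type, legend_type):
    """Hypothetical stand-in for the real test body; always passes here."""
    return True


CASES = build_cases(DATA_TYPES, LEGEND_TYPES)
RESULTS = {case: run_case(*case) for case in CASES}

print(len(CASES))  # 6 data types x 3 legend types = 18
```

Packing the combinations into one parameterized test keeps the authoring cost low, which is exactly why the number of cases can grow without anyone checking whether each one exercises a distinct code path.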

- Find the entry points into the features

- Set some breakpoints

- Step through

- Find references to the underlying functions and see if they’re already being tested

- Evaluate bugs attributed to these tests’ failures. Were we getting one-off defects or were all 18 passing and failing together?
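The last step can be sketched as a small script over failure history. This is a minimal example under stated assumptions: the history format (a run ID mapped to the set of variants that failed in that run), the function name `classify_failures`, and the sample data are all made up for illustration and do not come from our actual tooling or results.

```python
def classify_failures(run_history, all_variants):
    """Split failing runs into two groups.

    together: runs where every variant failed (suggests a shared cause)
    partial:  runs where only some variants failed (suggests a variant
              is exercising something unique)
    """
    together, partial = [], []
    for run_id, failed in run_history.items():
        if not failed:
            continue  # clean run, nothing to classify
        if set(failed) == set(all_variants):
            together.append(run_id)
        else:
            partial.append(run_id)
    return together, partial


# Made-up history for illustration only.
VARIANTS = {"color", "shape", "size"}
HISTORY = {
    "run-1": set(),
    "run-2": {"color", "shape", "size"},
    "run-3": {"shape"},
}

TOGETHER, PARTIAL = classify_failures(HISTORY, VARIANTS)
print("failed together:", TOGETHER)
print("failed partially:", PARTIAL)
```

If the partial list stays empty over a long history, the variants are probably covering the same code path, which is a signal (not proof) that most of them can go.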

For example, consider the legend types: color, shape, and size. While there is certainly merit to testing each of those at a rendering level, the legend type did not make a difference in how our features behaved; no unique code paths forked from it. As another example, copy and paste utilized common parsing and formatting functions already tested in other E2E scenarios. While testing the copy and paste engine has merit, writing a bunch of tests specific to our features was redundant with the parsing and formatting tests we already had. Finally, while there were certainly a handful of bugs attributed to this suite of tests, no failures were unique to any variant, and they were mostly test case maintenance issues.

So, we ultimately decided to keep 344 of the 1132 tests, a little over half of them as E2E tests. We may have been able to make deeper cuts, but, frankly, analyzing test cases full time for several months got tedious, and we wanted to do some more interesting work. We also decided to err on the side of caution, and we may find deeper cuts as we port those tests. So, we were happy with getting rid of about 70% of our tests. As an added benefit, this was the first opportunity for our test engineers to work in the development code, widening their breadth of technical skills. Some of them even liked it! This kind of potential career growth for test engineers is a related topic of interest that I may touch on in a future post.

As of July 2022, a vendor team is working on the 184 tests to port to Java, with a few dozen already complete. When we work on the 160 tests to move upstream is still a matter of priority, but the items are in the backlog. Getting started is often the most difficult part, but once your team decides to take a look at its E2E test inventory, I hope this can serve as an example of how to be pragmatic. Of course, the biggest risk is losing some coverage that would have caught a bug, so we cannot immediately tell how successful this endeavor was. However, being frank about the trade-offs and not letting perfection stand in the way of progress has already made us more efficient. In the worst-case scenario of letting a bug slip by, we’ll be honest about it, fix it, learn, and continue to move forward.