August 15, 2014

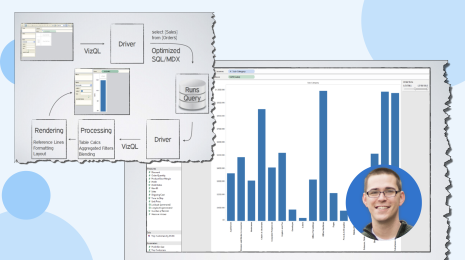

Tableau Data Extracts - Tips, Tricks and Best Practices

This is the last post in a three-part series about Tableau data extracts. In the first post, we looked at how Tableau data extracts are built and used by Tableau. In the second, we looked at why you would want to use TDEs and some sample use cases. In this last post, we will look at tips, techniques and best practices.

Note: This is the third installment in our series. View the first and second installments to learn more about Tableau data extracts.

Some General Things to Keep in Mind:

- If you hide fields in Tableau after creating an extract and before running an incremental or full refresh, the fields remain hidden but are not physically removed from the extract. Hidden fields can be unhidden at any time.

- Tableau Desktop uses the location pointed to by the system’s %TEMP% environment variable for all temporary files used in the process of creating extracts. Tableau Server uses the folder :\ProgramData\Tableau\Tableau Server\data\tabsvc\temp.

- Tableau data extracts attempt to retain the case sensitivity of the source data. For instance, string comparisons are by default case insensitive in SQL Server but case sensitive in Oracle. Extracts based on those data sources would match their respective case sensitivity for the purpose of making string comparisons.

- A user with the permission to schedule an extract refresh can change the type of the refresh from incremental to full. To prevent users from being able to schedule full extract refreshes during business hours, make sure to configure your schedules accordingly.

- A Tableau extract written to a contiguous area of free space will be more performant than one written in fragments across a hard drive. Regularly defragmenting a Tableau Server’s hard drive or using SSDs are two ways to ensure that extract files are contiguous to the extent possible.

- As of version 8.1, the Tableau Data Engine API can be used to create both full and incremental extracts.

- In a Tableau Server environment, it’s important to make sure that the backgrounder has enough disk space to store existing Tableau extracts as well as to refresh them and create new ones. A good rule of thumb is that the disk available to the backgrounder should be two to three times the size of the extracts that are expected to be stored on it.

- Tabcmd (a command-line utility) can be used to refresh extracts, as well as to publish TDEs to Tableau Server.
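As a rough illustration of the tabcmd tip above, here is a minimal Python sketch that only assembles the command lines for a refresh and a publish. The data source name, project name and file name are made up for the example, and the option names (`--datasource`, `--project`, `--incremental`, `--overwrite`) are the ones I recall from the tabcmd help, so confirm them with `tabcmd help refreshextracts` and `tabcmd help publish` for your version. It also assumes you have already signed in with `tabcmd login`.

```python
import shlex


def refresh_extract_cmd(datasource, project=None, incremental=False):
    """Build a tabcmd command that refreshes a published data source's extract."""
    cmd = ["tabcmd", "refreshextracts", "--datasource", datasource]
    if project:
        cmd += ["--project", project]
    if incremental:
        # Without this flag tabcmd requests a full refresh.
        cmd.append("--incremental")
    return cmd


def publish_tde_cmd(tde_path, project=None, overwrite=False):
    """Build a tabcmd command that publishes a .tde file to Tableau Server."""
    cmd = ["tabcmd", "publish", tde_path]
    if project:
        cmd += ["--project", project]
    if overwrite:
        cmd.append("--overwrite")
    return cmd


# Hypothetical names, for illustration only.
refresh = refresh_extract_cmd("Sales", project="Finance", incremental=True)
publish = publish_tde_cmd("sales.tde", project="Finance", overwrite=True)

# Print the commands instead of running them, so the sketch is safe to try.
print(shlex.join(refresh))
print(shlex.join(publish))
```

To actually run one of these, pass the list to `subprocess.run(cmd, check=True)` on a machine where tabcmd is installed and on the PATH.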

Tips for Incremental Refreshes

- Incremental extracts retrieve only new records from the data source, reducing the amount of time required to create an up-to-date extract. If there are no new records to add during an incremental extract, the bulk of the processes associated with performing an incremental extract still execute. Incremental extracts become less performant over time. This is because, by definition, incremental extracts only grow in size, and as a result, the amount of data and areas of memory that must be accessed in order to satisfy requests only grow as well. In addition, larger files are more likely to be fragmented on a disk than smaller ones.

- When performing an incremental extract, records are not replaced. Therefore, using a date column such as “Last Updated” to drive an incremental refresh could result in duplicate rows in the extract.

- When creating an incremental refresh against an Excel data source, only Date columns will be available to use for defining new rows. This is because Excel is not strongly typed, and Tableau does not know with certainty that a column that contains numbers contains only integers.

- Incremental refreshes are not possible after an additional file has been appended to a file-based data source, because the extract has multiple sources at that point.

- When publishing an extract that will not or should not be refreshed, connect directly to the extract file as a data source before publishing.
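The duplicate-row warning above is easier to see with a toy model. The sketch below is not how the Tableau Data Engine is implemented; it simply assumes an incremental refresh appends every source row whose value in the chosen column is greater than the largest value already in the extract, and never replaces existing rows. The table and column names are hypothetical.

```python
from datetime import date


def incremental_refresh(extract, source, column):
    """Append source rows whose `column` value exceeds the extract's maximum.

    Existing extract rows are never replaced. Assumes a non-empty extract.
    """
    high_water_mark = max(row[column] for row in extract)
    new_rows = [row for row in source if row[column] > high_water_mark]
    return extract + new_rows


def order_ids(rows):
    return [row["order_id"] for row in rows]


# The extract as first created from the source.
extract = [
    {"order_id": 1, "amount": 100, "last_updated": date(2014, 8, 1)},
    {"order_id": 2, "amount": 250, "last_updated": date(2014, 8, 2)},
]

# Later, order 1 is edited in the source and order 3 is added.
source = [
    {"order_id": 1, "amount": 120, "last_updated": date(2014, 8, 3)},
    {"order_id": 2, "amount": 250, "last_updated": date(2014, 8, 2)},
    {"order_id": 3, "amount": 75, "last_updated": date(2014, 8, 3)},
]

by_last_updated = incremental_refresh(extract, source, "last_updated")
by_order_id = incremental_refresh(extract, source, "order_id")

print(order_ids(by_last_updated))  # [1, 2, 1, 3] -> order 1 is now duplicated
print(order_ids(by_order_id))      # [1, 2, 3]    -> no duplicate, but the edit is missed
```

Driving the refresh from an ever-increasing key avoids the duplicate, but the edited amount for order 1 never reaches the extract. That is one more reason to schedule a periodic full refresh.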

Tips for Aggregated Extracts

- Use caution with aggregations such as COUNTD or other non-additive aggregations. In order for Tableau to be able to recalculate a distinct count, it has to bring all of the unique members of the base field into the extract. For instance, if an aggregated extract included COUNTD(Customer_ID), the GROUP BY clause of the SQL query used to retrieve data from the underlying source would include Customer_ID.

- Use caution with row level calculations that involve a parameter. Since the value of the parameter can change, Tableau includes all fields referenced in row level calculations on the extract’s Level of Detail.

- “Number of Records” is special in aggregated extracts. Its default aggregation is SUM. If using Number of Records after creating an aggregated extract, it will show the number of records in the underlying data source - i.e. the original number of records. This is actually very useful for many types of secondary calculations that might depend on knowing the original rows in the underlying source. To know how many rows are in the extract, simply change the default aggregation to COUNT.
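Two of the points above, the COUNTD caution and the behavior of Number of Records, can be illustrated with a few lines of Python. This is only a toy model of an aggregated extract with made-up data: each extract row stores its dimension values plus the number of source rows that were rolled up into it.

```python
from collections import defaultdict

# Hypothetical row-level source data: (region, customer_id).
source = [
    ("East", "C1"), ("East", "C1"), ("East", "C2"),
    ("West", "C2"), ("West", "C3"),
]


def aggregate(rows, key):
    """Roll rows up to one row per key, keeping the original row count."""
    counts = defaultdict(int)
    for row in rows:
        counts[key(row)] += 1
    return [{"dims": dims, "number_of_records": n} for dims, n in counts.items()]


# Extract aggregated to the Region level.
by_region = aggregate(source, key=lambda r: (r[0],))
sum_of_records = sum(row["number_of_records"] for row in by_region)
count_of_records = len(by_region)
print(sum_of_records)    # 5 -> rows in the underlying source
print(count_of_records)  # 2 -> rows in the aggregated extract

# Distinct counts are not additive: summing per-region results overcounts C2.
per_region = {reg: len({c for r, c in source if r == reg}) for reg in ("East", "West")}
naive_total = sum(per_region.values())
true_total = len({c for _, c in source})
print(naive_total, true_total)  # 4 3

# To recompute the distinct count, customer_id has to stay in the level of detail,
# which makes the extract larger.
by_region_customer = aggregate(source, key=lambda r: (r[0], r[1]))
recomputed_total = len({row["dims"][1] for row in by_region_customer})
print(len(by_region_customer), recomputed_total)  # 4 3
```

Keeping the customer ID in the extract doubles the row count in this tiny example (4 rows instead of 2), which is the cost the COUNTD bullet is warning about.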

Data Source Considerations

- When extracting data from SAP BW, be aware that some limitations exist.

- When creating an initial extract from a Salesforce.com data source, Tableau retrieves all objects and the extract creation can be time consuming – several hours in some cases. Allow the initial extract to complete and use incremental refreshes to keep the data up-to-date.

In summary, be sure to keep in mind these simple best practices:

- Use full refreshes when possible

- Incrementally refreshed extracts should be fully refreshed at regular intervals (e.g. every weekend or monthly) in order to maximize performance

- Publish extracts to Data Server to help avoid redundant extracts in the Tableau Server environment

- Hide unused columns before creating an extract in order to speed extract creation and to preserve storage space

- Make sure there is enough contiguous free disk space for the largest extract in use in order to optimize extract performance. One way to achieve this is to use SSD drives as they do not become fragmented (a function of how data is retrieved from an SSD, not of where it is stored).
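For the disk space guidance, the earlier rule of thumb (a disk two to three times the size of the extracts stored on it) is simple arithmetic. Here is a minimal sketch, assuming you already know the extract sizes in bytes; the sizes and function names are hypothetical. It cannot tell you whether the free space is contiguous, only whether the volume is large enough.

```python
import shutil

GIB = 1024 ** 3


def recommended_disk_bytes(extract_sizes, factor=3):
    """Rule of thumb: disk size of two to three times the stored extracts."""
    return factor * sum(extract_sizes)


def volume_is_large_enough(total_disk_bytes, extract_sizes, factor=3):
    """True if the volume meets the rule of thumb for the given extracts."""
    return total_disk_bytes >= recommended_disk_bytes(extract_sizes, factor)


# Hypothetical extract sizes: 2 GiB, 5 GiB and 13 GiB.
extract_sizes = [2 * GIB, 5 * GIB, 13 * GIB]

low = recommended_disk_bytes(extract_sizes, factor=2)
high = recommended_disk_bytes(extract_sizes, factor=3)
print(low // GIB, high // GIB)  # 40 60

if __name__ == "__main__":
    # Check the volume that holds the current directory; point this at the
    # backgrounder's data volume in practice.
    usage = shutil.disk_usage(".")
    print(volume_is_large_enough(usage.total, extract_sizes))
```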

Hopefully these posts have helped you to better understand TDEs and as a result, to make more effective use of them. Many thanks to Marc Rueter, Russell Christopher and David Spezia from Tableau for their very valuable contributions to this series of posts.

Happy Vizzing!