Understanding Tableau Data Extracts

Note: This is the first installment in our series. View the second and third installments to learn more about Tableau data extracts.

This is the first post in a three-part series that will take a large amount of information about Tableau data extracts, highly compress that information, and place it into memory—yours.

Or better yet, it will make that information available to you so you can grab what you need now and come back later for more. That’s much closer to the architecture-aware approach used by Tableau’s fast, in-memory data engine for analytics and discovery.

What is a Tableau Data Extract (TDE)?

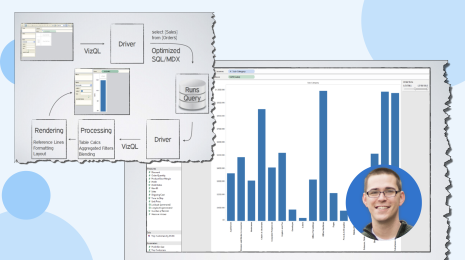

A Tableau data extract is a compressed snapshot of data stored on disk and loaded into memory as required to render a Tableau viz. That’s fair for a working definition. However, the full story is much more interesting and powerful.

There are two aspects of TDE design that make them ideal for supporting analytics and data discovery. The first is that a TDE is a columnar store. I won’t go into detail about columnar stores – there are many fine documents that already do that, such as this one.

However, let’s at least establish the common understanding that columnar databases store column values together rather than row values. As a result, they dramatically reduce the input/output required to access and aggregate the values in a column. That’s what makes them so wonderful for analytics and data discovery.

Figure 1 - A columnar store makes it possible to quickly operate over the values in any given column
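To make the row-versus-column difference concrete, here is a minimal Python sketch. It is an illustration only, not Tableau's implementation: the sample records and the `to_columns` helper are made up for this example.

```python
# Row-oriented layout: each record keeps all of its fields together.
rows = [
    {"region": "East", "product": "Chair", "sales": 120.0},
    {"region": "West", "product": "Desk", "sales": 340.0},
    {"region": "East", "product": "Lamp", "sales": 45.5},
]


def to_columns(records):
    """Pivot a list of records into one list of values per column."""
    columns = {}
    for record in records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns


columns = to_columns(rows)

# Row store: aggregating sales means visiting every record,
# including the fields the query never asked for.
row_total = sum(record["sales"] for record in rows)

# Column store: the same aggregate reads one list of values
# and never touches "region" or "product".
column_total = sum(columns["sales"])

print(row_total, column_total)
```

Both totals are identical. What changes is how much unrelated data has to be read to get there, and that is where the input/output savings come from.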

The second key aspect of TDE design is how they are structured, which affects how they are loaded into memory and used by Tableau. This is a very important part of how TDEs are “architecture aware.” Basically, architecture awareness means that TDEs use all parts of your computer’s memory, from RAM to hard disk, and put each part to work as best fits its characteristics.

To better understand this aspect of TDEs, we’ll walk through how a TDE is created and then used as the data source for one or more visualizations.

When Tableau creates a data extract, it first defines the structure for the TDE and creates a separate file for each column in the underlying source. (This is why it’s beneficial to minimize the number of data source columns selected for the extract.)

As Tableau retrieves data, it sorts and compresses the values for each column and adds them to that column’s file. With Tableau 8.2, the sorting and compression occur sooner in the process than in previous versions, accelerating the operation and reducing the amount of temporary disk space used for extract creation.
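The one-file-per-column idea can be sketched in a few lines of Python. This is a toy layout for illustration, not the real TDE format: the `write_column_files` helper, the `.col` extension and the one-value-per-line encoding are all assumptions, and the sorting and compression steps are left out.

```python
import tempfile
from pathlib import Path

source_rows = [
    {"region": "East", "product": "Chair", "sales": 120.0},
    {"region": "West", "product": "Desk", "sales": 340.0},
    {"region": "East", "product": "Lamp", "sales": 45.5},
]


def write_column_files(records, selected_columns, out_dir):
    """Write one file per selected column, one value per line (toy layout)."""
    out_dir = Path(out_dir)
    paths = {}
    for name in selected_columns:
        path = out_dir / f"{name}.col"
        with path.open("w", encoding="utf-8") as handle:
            for record in records:
                handle.write(f"{record[name]}\n")
        paths[name] = path
    return paths


with tempfile.TemporaryDirectory() as tmp:
    # Selecting two of the three source columns produces two files.
    paths = write_column_files(source_rows, ["region", "sales"], tmp)
    created = sorted(path.name for path in paths.values())
    sales_text = paths["sales"].read_text(encoding="utf-8")

print(created)
```

Every column left out of the selection is a file that never has to be written, stored or refreshed, which is the practical reason to trim unused columns before extracting.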

People often ask if a TDE is decompressed as it is being loaded into memory. The answer is no. The compression used to reduce a TDE’s storage requirements and make it more efficient is not file compression.

Rather, several different techniques are used, including dictionary compression (where common column values are replaced with smaller token values), run length encoding, frame of reference encoding and delta encoding (you can read more about these compression techniques here). However, good old file compression can still be used to further reduce the size of a TDE if you’re planning to email or copy it to a remote location.

Figure 2 - Compression techniques are used to further optimize the TDE columnar store. Each column becomes a memory-mapped file in the TDE store
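For readers who have not met these encodings before, the sketch below shows textbook versions of all four in Python. The function names and sample values are invented for illustration, and nothing here is meant to describe how Tableau implements them internally.

```python
def dictionary_encode(values):
    """Replace each value with a small integer token plus a lookup table."""
    dictionary, index, tokens = [], {}, []
    for value in values:
        if value not in index:
            index[value] = len(dictionary)
            dictionary.append(value)
        tokens.append(index[value])
    return dictionary, tokens


def run_length_encode(values):
    """Collapse consecutive repeats into (value, run_length) pairs."""
    runs = []
    for value in values:
        if runs and runs[-1][0] == value:
            runs[-1][1] += 1
        else:
            runs.append([value, 1])
    return [tuple(run) for run in runs]


def frame_of_reference_encode(values):
    """Store the minimum once, then each value as a small offset from it."""
    base = min(values)
    return base, [value - base for value in values]


def delta_encode(values):
    """Store the first value, then each difference from the previous value."""
    if not values:
        return []
    return [values[0]] + [b - a for a, b in zip(values, values[1:])]


regions = ["East", "East", "East", "West", "West", "East"]
dictionary, tokens = dictionary_encode(regions)
print(dictionary, tokens)          # ['East', 'West'] [0, 0, 0, 1, 1, 0]
print(run_length_encode(tokens))   # [(0, 3), (1, 2), (0, 1)]
print(frame_of_reference_encode([1000, 1003, 1001, 1007]))  # (1000, [0, 3, 1, 7])
print(delta_encode([100, 102, 105, 105, 110]))  # [100, 2, 3, 0, 5]
```

Notice that the encoded values can still be compared and aggregated directly, for example by counting tokens or summing offsets. That property is what separates these techniques from file compression, which has to be undone before the data is usable.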

To complete the creation of a TDE, the individual column files are combined with metadata to form a memory-mapped file. To be more accurate, they form a single file containing as many individual memory-mapped files as there are columns in the underlying data source. This is a key enabler of the TDE’s carefully engineered architecture-awareness. (Even if the term is unfamiliar, you’ve come across memory-mapped files before. They are a feature of any modern operating system (OS). Read more about them here.)

Because a TDE is a memory-mapped file, when Tableau requests data from a TDE, the data is loaded directly into memory by the operating system. Tableau doesn’t have to open, process or decompress the TDE to start using it. If necessary, the operating system continues to move data in and out of RAM to ensure that all of the requested data is made available to Tableau. This is a key point: it means that Tableau can query data that is bigger than the available RAM on a machine!

Only data for the columns that have been requested is loaded into RAM. However, there are also some other subtler optimizations. For example, a typical OS-level optimization is to recognize when access to data in a memory-mapped file is contiguous and, as a result, read ahead in order to increase access speed. A memory-mapped file is also loaded only once by the OS, no matter how many users or visualizations access it.
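If you want to see memory mapping at work, Python’s standard `mmap` module exposes the same OS feature. The sketch below is only an analogy for the behavior described above, not the TDE file format: the `.col` files, the fixed-width encoding and the `write_column` and `read_range` helpers are assumptions made for this example.

```python
import mmap
import struct
import tempfile
from pathlib import Path

VALUE = struct.Struct("<d")  # one 8-byte little-endian float per value


def write_column(path, values):
    """Store a numeric column as fixed-width binary values."""
    with open(path, "wb") as handle:
        for value in values:
            handle.write(VALUE.pack(value))


def read_range(path, start, count):
    """Map one column file and decode only the requested values."""
    with open(path, "rb") as handle:
        with mmap.mmap(handle.fileno(), 0, access=mmap.ACCESS_READ) as mapped:
            # Mapping does not read the file up front. The OS pages in
            # only the region touched by this slice.
            chunk = mapped[start * VALUE.size:(start + count) * VALUE.size]
    return [value for (value,) in VALUE.iter_unpack(chunk)]


with tempfile.TemporaryDirectory() as tmp:
    sales_path = Path(tmp) / "sales.col"
    profit_path = Path(tmp) / "profit.col"
    write_column(sales_path, [float(i) for i in range(1000)])
    write_column(profit_path, [float(i) / 10 for i in range(1000)])

    # The query needs only sales, so profit.col is never opened or mapped.
    window = read_range(sales_path, 500, 10)
    window_sum = sum(window)

print(window_sum)
```

The program never asks for the whole file to be read. It maps the column and touches the bytes it needs, and the operating system decides what to bring into RAM and what to evict.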

Since it isn’t necessary to load the entire contents of TDEs into memory for them to be used, the hardware requirements — and so the costs — of a Tableau Server deployment are kept reasonable.

Lastly, architecture-awareness does not stop with memory – TDEs support the Mac OS X and Linux OS in addition to Windows, and are 32- and 64-bit cross-compatible. It doesn’t get much better than that for a fast, in-memory data engine. If you’re interested, you can read about other important breakthrough technologies in Tableau here.

Now that you understand what makes a TDE such a technical breakthrough, we’re ready to turn our attention to why you should use them and some example use cases. We’ll cover those topics in the next article in this three-part series.