Interweaving Multimodal Interaction with Flexible Unit Visualizations for Data Exploration

IEEE TVCG 2020 (also presented at IEEE VIS 2020)

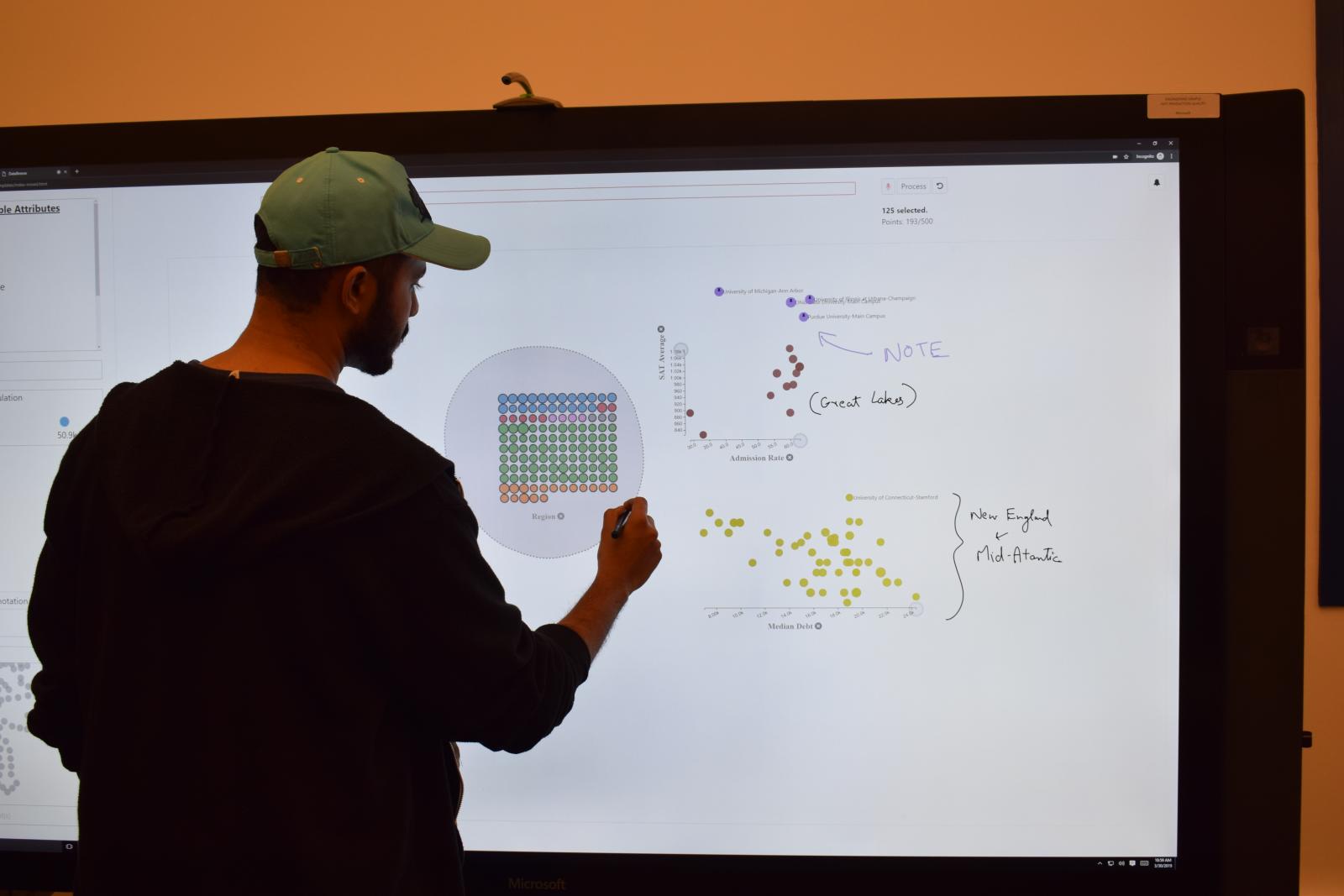

Multimodal interfaces that combine direct manipulation and natural language have shown great promise for data visualization. Such multimodal interfaces allow people to stay in the flow of their visual exploration by leveraging the strengths of one modality to complement the weaknesses of others. In this work, we introduce an approach that interweaves multimodal interaction combining direct manipulation and natural language with flexible unit visualizations. We employ the proposed approach in a proof-of-concept system, DataBreeze. Coupling pen, touch, and speech-based multimodal interaction with flexible unit visualizations, DataBreeze allows people to create and interact with both systematically bound (e.g., scatterplots, unit column charts) and manually customized views, enabling a novel visual data exploration experience. We describe our design process along with DataBreeze’s interface and interactions, delineating specific aspects of the design that empower the synergistic use of multiple modalities. We also present a preliminary user study with DataBreeze, highlighting the data exploration patterns that participants employed. Finally, reflecting on our design process and preliminary user study, we discuss future research directions.

Tableau Author(s)

Author(s)

Bongshin Lee, John Stasko