How communication and documentation paved our yellow brick road to the cloud

In our previous post in this series detailing Tableau’s migration to the cloud, we reflected on the staffing, governance, and some of the more technical aspects of our migration. In our final post, we’d like to share thoughts on the documentation and communication efforts we took to keep everyone up to speed and accountable to our migration timeline.

Communication and documentation: Critical measures to successfully migrate

With approximately 9,500 workbooks and 1,900 data sources to move, we relied on consistent communication and clear migration documentation, customized for analysts and their business units. Our goal was to ensure that all data and content owners would understand what was expected of them, including the project deadlines, as well as what helpful resources were available to them if they hit a roadblock. Without this critical communication, our migration would have been impossible to manage.

Communication

We can’t overstate how necessary it was to have thoughtful, clear communication at regular intervals. Communication aligned the core migration team landing the data with the extended team of data scientists, engineers, and subject matter experts whose Tableau content (and other analytical assets) depended on that data. It also aligned the stewards of the data with their consumers.

Tableau employees span a broad range of Tableau expertise, from absolute beginners to Zen Masters. That meant we needed two sets of communication to ensure the right audience received the right message. Having a list of the most important Tableau content and analytics assets that needed to migrate to Snowflake also let us anticipate incoming questions and proactively develop more targeted communication.

For the stewards who own important data sources and dashboards, we held weekly meetings to crowdsource their expertise and to ensure that they felt supported. In these meetings, we would strategize, air concerns, brainstorm workarounds, share best practices, and build clear processes for testing and signoff of data and the work connected to it.

The much larger audience of data consumers knew this change was coming, but the average person didn’t know what it meant for them. For this audience, we established more general communication with the goal of informing them without overwhelming them with details. We also held office hours, and because they proved so popular, we encouraged people to seek migration help during Data Doctor appointments, a separate program of one-on-one sessions in which Tableau experts solve technical challenges and get dashboards in tip-top shape.

Our dedicated project manager oversaw the technical aspects of the timeline as well as communication, but no one focused solely on communications across the project. Given the size of our deployment, we should have made these distinct roles. In our experience, the technical work consumed more time and resources, so communication received less attention and was often deprioritized.

Recommendations

- Communicate early, often, and in multiple places: Inevitably, more people than you expect will ask, “Why hasn’t my data been migrated yet?” or “What’s the final deadline for shutting off the old database?” So communicate, communicate again, and then communicate one last time. Plan to use a variety of mediums, from email and Slack to data quality warnings in Tableau Server to banners on wiki front pages.

- First things first: Many teams that had not invested heavily in governance quickly found themselves overwhelmed by the amount of content they were responsible for. But not all of that content was critical to keep. In these situations, we advised stewards to sort content into tiers: critical, important but not critical, and unimportant. Socializing this broadly, and getting stakeholder signoff that only certain tiers would be prioritized, also helped ensure business continuity.

- Prioritize a migration communication lead: This person should oversee the communication and documentation efforts, deciding how, when, and through which mediums to reach everyone. Ideally, they have experience working with a wide variety of teams that produce analytical content in Tableau and are comfortable troubleshooting Tableau Desktop issues. They should also be a “people connector” who helps ensure that communication efforts build on one another rather than duplicate each other.

- Consider the stragglers: In a self-service environment, and with a change this big, ad hoc and reactive work can’t be avoided, so plan for it. Although we communicated the server shutoff as a hard deadline, we also planned for a small group to retain access beyond it. Any content that had been completely forgotten was funneled through this group, and in exchange, they received extra support for contingencies.

Documentation

Providing documentation with clear steps is a requirement that spans the entirety of the data lifecycle. Engineers and DBAs need clear coding and naming guidelines, content owners need to know when and how to migrate their content, and data consumers need to know whether the content they rely on has been migrated. It’s not easy to proactively identify all the scenarios that people will encounter in the change. Instead, focusing on documentation that outlines the most common scenarios (like replacing a data source) can help save time, and ultimately, get all data and content feeding into the same platform for one source of truth.

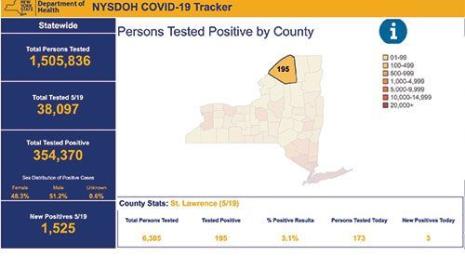

To support targeted documentation for every audience, we created multiple wiki pages with clear instructions guiding users through their data and content migrations. We updated these regularly as issues surfaced (e.g., user lockout after failed authentication), and we reduced confusion when navigating the content by linking keywords throughout. Our other documentation resources included Slack channels and several helpful Tableau dashboards, which we highlight and describe below.

This dashboard visualized data source and workbook connections to our old server (grey) versus the new server (blue); we used this to communicate the rate of adoption of our new server.

Below, a similar viz broken down by project identified teams that needed extra help to meet our deadlines.
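The adoption views described above boil down to a simple ratio per project: how much of each project’s content already connects to the new server. As a minimal sketch, assuming a hypothetical content inventory where each workbook or data source is tagged with its project and with an "old" or "new" server label, that ratio and a list of lagging projects could be computed as follows. The `Content` type, the server labels, and the 0.5 threshold are illustrative assumptions, not how our dashboards were actually built.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Content:
    """One workbook or data source in a hypothetical content inventory."""
    project: str
    name: str
    server: str  # assumed labels: "old" or "new"


def migration_share_by_project(items):
    """Fraction of each project's content that already points at the new server."""
    totals = Counter(item.project for item in items)
    migrated = Counter(item.project for item in items if item.server == "new")
    # Counter returns 0 for projects that have nothing migrated yet.
    return {project: migrated[project] / count for project, count in totals.items()}


def projects_needing_help(shares, threshold=0.5):
    """Projects whose migrated share falls below an illustrative threshold."""
    return sorted(project for project, share in shares.items() if share < threshold)
```

Run over an inventory where one project has two of its three items on the new server and another has none, this flags only the second project. The threshold is arbitrary and could be raised as the shutoff deadline approaches.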

Lastly, we created a dashboard to help users test the data, showing row counts for database objects in both environments, the last refresh date, and data types. We paired it with the dashboard below, which shows users what content still needs to be migrated (filtered to display only the content they own).
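The checks behind that testing dashboard (row counts, last refresh date, and data types in both environments) can be expressed as a small comparison. The sketch below assumes hypothetical per-table statistics have already been collected from each environment and that type names have been normalized to a shared vocabulary; the `TableStats` type and the messages it produces are illustrative, not part of any Tableau or Snowflake API.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class TableStats:
    """Hypothetical per-table statistics gathered from one environment."""
    row_count: int
    last_refresh: date
    column_types: dict  # column name -> normalized type name (assumed)


def compare_environments(old, new):
    """List discrepancies between old and new environments, table by table."""
    issues = []
    for table, old_stats in sorted(old.items()):
        new_stats = new.get(table)
        if new_stats is None:
            issues.append(f"{table}: missing in new environment")
            continue
        if old_stats.row_count != new_stats.row_count:
            issues.append(
                f"{table}: row count {old_stats.row_count} -> {new_stats.row_count}"
            )
        for column, old_type in sorted(old_stats.column_types.items()):
            new_type = new_stats.column_types.get(column)
            if new_type != old_type:
                issues.append(f"{table}.{column}: type {old_type} -> {new_type}")
        # A new copy refreshed earlier than the old one suggests a stalled pipeline.
        if new_stats.last_refresh < old_stats.last_refresh:
            issues.append(f"{table}: new copy refreshed earlier than old copy")
    return issues
```

An empty list means every table present in the old environment matched on these checks; anything else gives content owners a concrete starting point before they sign off.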

Recommendations

- Identify staffing escalation paths: When more involved migration issues surface that require a greater level of support, have a clear escalation plan. This should include staffing resources who can dedicate the appropriate time and attention to resolve the issue.

- Have comprehensive documentation: Whether it’s a single comprehensive document or a set of connected wiki pages, have one place where your critical information lives and where the hard work of the core and extended teams is captured. We found it most helpful to create wiki pages that answered the questions each of our two audiences (one technical, one not) would ask.

- Have fun too! While a database migration is nobody’s idea of fun, don’t miss this opportunity to further engage with your users. We conducted a data scavenger hunt with prizes for the correct answers. Since documentation can be long and technical, you could add an “easter egg” in your documentation, or you could gamify the process of migrating by team.

Our work’s not done...

Speaking from experience, moving data to the cloud wasn’t a simple process, but it was worth the work and time, and our story isn’t over. Thanks to the collective efforts of many champions who managed and did the work of transitioning our data and content to Snowflake, we’re now more unified around a single source of truth. We also have greater flexibility with our cloud platform and data sources to support new and exciting analytics use cases.

We’re now working to migrate our enterprise marketing data into Snowflake and to develop a completely new data platform that offers the marketing team more stable data pipelines, faster data access, and better performance in business-critical data sources. Currently, we’re in auditing mode, assessing what we want and need to migrate given our rich data history.

“We’re planning how we want to architect the new platform and the data flow from sources like LinkedIn, Twitter, and Google Analytics, and then push that into Snowflake to perform analytics that support greater innovation benefitting our Data Engineering team and the entire marketing organization,” said Maryam Marzban, Manager, Marketing Data Engineering. This is our last leg of the journey to having one trusted system that has already increased our data’s value and facilitates more responsible use. We’re nearly there!

If you missed our previous posts in this series, jump back to read them.
