Web Scraping for Everybody: Using the Import Functions in Google Sheets with Tableau Public 10

The good news is that this year aliens haven’t yet come down to Earth yet, whereas last year there were two UFO sightings. How do I know? Wikipedia! For your convenience, I have visualized the information below.

The really great news is that the viz will automatically refresh every 24 hours—even if the author were to get abducted by aliens tomorrow. The new Google Sheets connector in Tableau Public 10 makes this possible. Tableau Public requests a new extract of the data, which is stored in a Google Sheet, every 24 hours or whenever the author hits “request update,” and updates the viz accordingly.

How does the data get into the spreadsheet on Google Sheets in the first place? In our blog post announcing the launch of Tableau Public 10, we briefly showed that you can pull in web data using ImportHTML. Here, let’s take a closer look at how that works, as well as at three related formulae.

Scraping Tables and Lists with ImportHTML

The information about UFO sightings comes from this Wikipedia page. In particular, I used the two tables about UFO sightings in the 20th and 21st centuries.

You can import a table from any website into Google Sheets with the ImportHTML function. It is surprisingly easy. Try it out.

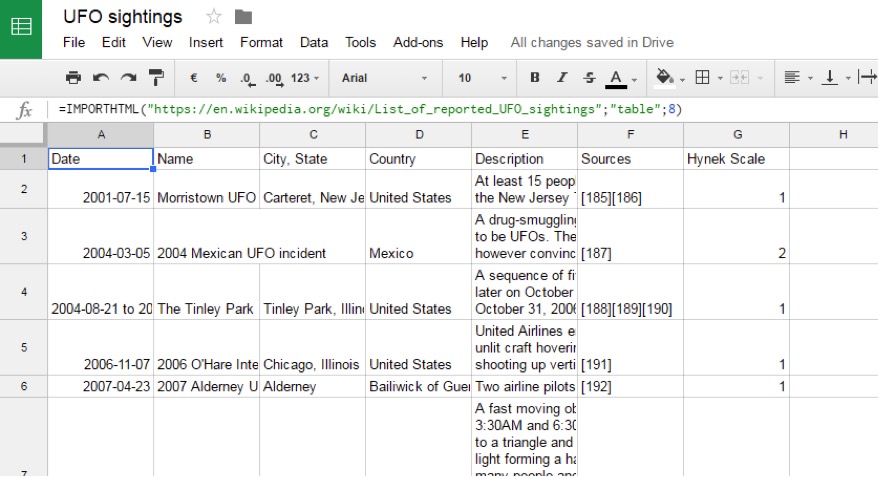

Open a new spreadsheet and type the following formula into the cell in the top-left corner: =IMPORTHTML("https://en.wikipedia.org/wiki/List_of_reported_UFO_sightings";"table";8)

Hit enter and give it a second to populate the table. It should now look like this:

We can now connect to that table in Tableau and build a viz. (Connect -> Google Sheets -> log in with Google credentials -> select table). What’s more, upon saving the viz on Tableau Public, we can tick the checkbox “keep my data in sync.” That ensures the viz will always reflects the latest information that the gremlins have entered on Wikipedia.

Let’s look at the ImportHTML formula in more detail. It takes three parameters that you can control:

- First comes the URL of the website. Make sure you put that in quotation marks. Alternatively, you can also refer to a cell that contains the URL. Wikipedia pages with tables work very well, but it can be literally any website with a table or list.

- Second, with “table,” we are telling Google Sheets that we are looking for a table in the specified website. As an alternative, you can also query lists, using “list.”

- Lastly, we need to indicate which table we want. (In the case above, it is the eighth table on the page.)

From experience, here are three issues that you might come across:

First, you can add other columns to your spreadsheets, but be careful that the ImportHTML function has enough space to populate the table.

Second and related, it is not really possible to stack several tables on top of each other. That’s because you might not know how many rows a table has, and you might overwrite the table below. Instead, use the Union feature in Tableau.

As I mentioned above, in the UFO viz we are using two tables from the Wikipedia page: one for the 20th century and one for the 21st. I duplicated the spreadsheet in Google Sheets, and on the second tab, I changed the formula to pull the seventh table, not number 8, which is the table for 20th century UFO sightings. Then, in Tableau, I created a Union using the two spreadsheets:

(For another project, I manually created 31 sheets. Let me know @FRamseger should you come up with a faster way to pull a list of URLs into Google Sheets.)

Finally, as convenient as it is to make use of crowd-sourced information on Wikipedia, beware that when Wikipedia entries are edited, the structure of the page might change.

For instance, I recently created a tracker that pulled the latest results from a large sporting event that recently took place in the Southern Hemisphere. Based on the pages for previous editions of the event, I had good reasons to believe that there would be a table on Wikipedia listing the achievements of different nations.

However, when the action kicked off, the page for the 2016 edition was being edited heavily. And twice the location of the table I was referencing changed, leaving my tracker hanging with no data. If you think the website might change, you might want to check in on your viz from time to time.

Extracting Other Information from Websites with ImportXML

What if you’d like to access information on a website that is not in a list or a table? You can do that with the ImportXML formula.

You might have noticed the footer in my UFO viz. It shows when the Wikipedia page that the viz pulls from was last updated:

I pulled this information from Wikipedia into Google Sheets using the aforementioned feature. Every Wikipedia entry has this information at the bottom of the page:

To get this into Google Sheets and hence into Tableau, I created a new spreadsheet with the following formula in one of the cells. (I used the left-most cell in the second row, so that I could add a column header above that.): =IMPORTXML("https://en.wikipedia.org/wiki/List_of_reported_UFO_sightings";"//li[@id='footer-info-lastmod']")

Hitting enter gives me this:

I then added this as a new data source in my Tableau workbook ( Data-> New Data Source). I stripped the date and time out of that sentence using custom splits on “ on “ (i.e. the word preceding the date), and created a new sheet with this new field as text. Then I added that sheet to the bottom of my dashboard.

Even if you don’t understand how this works, you can use the above formula on any Wikipedia page out there—just replace the URL. Nonetheless, let’s have a look at the formula in a little more detail to gain some basic understanding of how it works. This one only has two parameters:

- Same as before, the URL of the website in question.

- The so-called x-path query. This one might sound a little more esoteric, but is not that hard to understand. An x-path is essentially a reference to an element in the website html code. Like folders on your hard drive, the html-tags are usually nested. The x-path gives the direction to the element you want. In the above example, we are looking for the list element (li) with the ID “footer-info-lastmod.”

A handy trick to get the x-path of an element is to use the “inspect” function of your browser. In Chrome, for instance, you can select the element, right-click, and choose “inspect” in the menu. In the right-hand panel, the html code related to that part should already be highlighted. Right-click on it, and select copy - > copy x-path.

Once you get the hang of it, there are lots of cool things you can do with ImportXML. The sky is the limit, but here one more example to get your creative juices going: Try grabbing the number of followers from your Twitter profile.

Importing CSV Files with ImportData

Of course, many websites don’t spit out data in forms of tables but as a downloadable file. Using the ImportData function, you can get comma-separated (CSV) and tab-separated (TSV) text files into Google Sheets.

This formula is also very simple—all you do is you put the URL inside the brackets, like this: =IMPORTDATA("http://apps.who.int/gho/athena/data/xmart.csv?target=GHO/WHS5_122&profile=crosstable&filter=COUNTRY:*;REGION:*&x-sideaxis=COUNTRY&x-topaxis=GHO;YEAR;RESIDENCEAREATYPE")

Don’t forget those quotation marks if you are writing it directly into the formula.

Importing News Feeds with ImportFeed

Lastly, you can use ImportFeed to query atom or RSS feeds. For example, to pull the news feed of NASA’s Mars Exploration Program into a spreadsheet, use this formula: =IMPORTFEED(“http://mars.nasa.gov/rss/?feed=news&category=all”)

By default, it gives you all the items currently published by the feed. You can choose to only retrieve certain columns, or specify the number of items. See here for more information about this as well as the other formulae mentioned above.

Show Us Your Vizzes

There are many ways to get your data into Google Sheets: via manual entry, via a webscraping service such as Import.io, or via tools like Zapier or IFTTT (as described by my colleague here).

The import functions described above are a good alternative for web data that is relatively well structured and accessible, such as tables on Wikipedia.

If you want to get started with Tableau and are still looking for data, why not browse Wikipedia for an interesting topic? UFOs are not everyone’s cup of tea, but with five million pages on the English Wikipedia, there is something for everyone.

Send us your vizzes based on your favorite topic! Post them in the comments below or tweet them to us @tableaupublic.

Zugehörige Storys

Blog abonnieren

Rufen Sie die neuesten Tableau-Updates in Ihrem Posteingang ab.